Objects in JavaScript are awesome. They can do anything! Literally…anything.

But, like all things, just because you can do something, doesn’t (necessarily) mean you should.

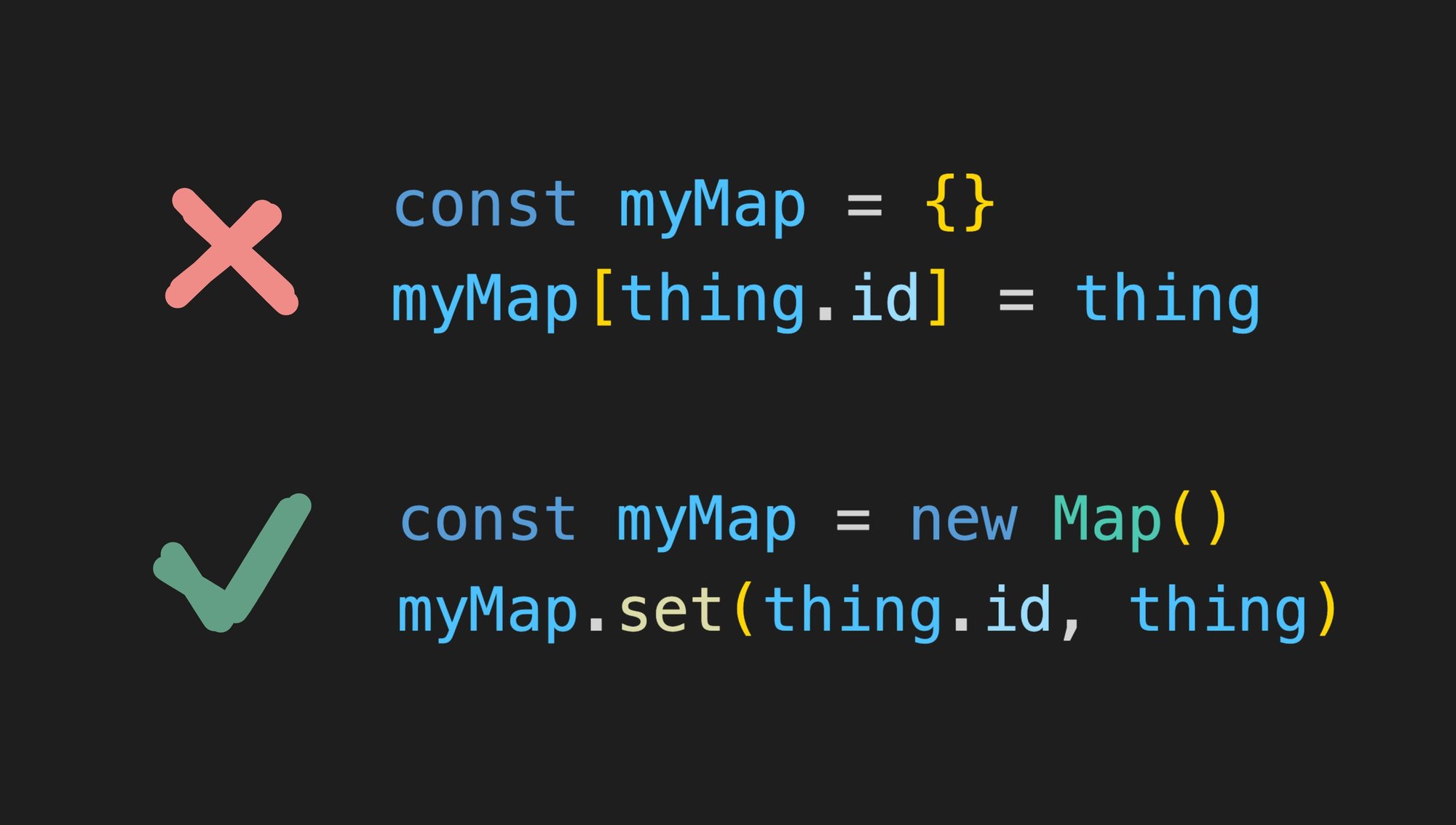

// 🚩

const mapOfThings = {}

mapOfThings[myThing.id] = myThing

delete mapOfThings[myThing.id]

For instance, if you're using objects in JavaScript to store arbitrary key-value pairs where you'll be adding and removing keys frequently, you should really consider using a map instead of a plain object.

// ✅

const mapOfThings = new Map()

mapOfThings.set(myThing.id, myThing)

mapOfThings.delete(myThing.id)

Performance issues with objects

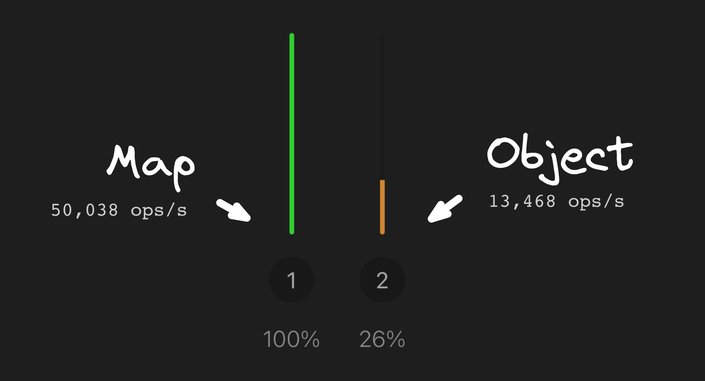

Whereas the delete operator on objects is notorious for poor performance, maps are optimized for this exact use case and in some cases can be seriously faster.

Note of course this is just one example benchmark (run with Chrome v109 on a Core i7 MBP). You can also compare another benchmark created by Zhenghao He. Just keep in mind — micro benchmarks like this are notoriously imperfect so take them with a grain of salt.

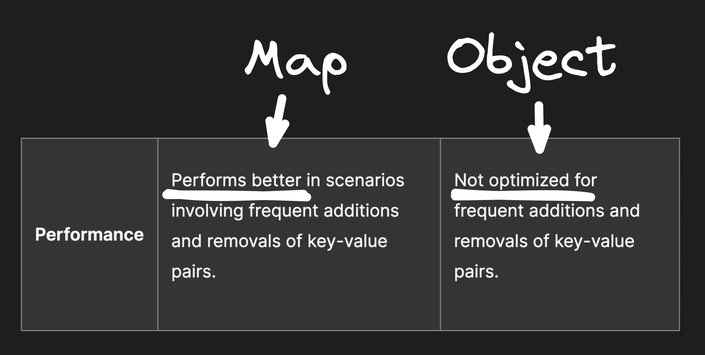

That said, you don’t need to trust my or anyone else’s benchmarks, as MDN itself clarifies that maps are specifically optimized for this use case of frequently adding and removing keys, as compared with an object that is not as optimized for this use case:

If you are curious why, it has to do with how JavaScript VMs optimize JS objects by assuming their shape, whereas a map is purpose-built for the use case of a hashmap where keys are dynamic and ever-changing.

Read more about how VMs assume shapes in this thread by Miško (CTO of Builder.io, and creator of Angular and Qwik):

Another great article is What’s up with monomorphism, which explains the performance characteristics of objects in JavaScript, and why they are not as optimized for hashmap-like use cases of frequently adding and removing keys.

But beyond performance, maps also solve for several issues that exist with objects.

One major issue of objects for hashmap-like use cases is that objects are polluted with tons of keys built into them already. WHAT?

const myMap = {}

myMap.valueOf // => [Function: valueOf]

myMap.toString // => [Function: toString]

myMap.hasOwnProperty // => [Function: hasOwnProperty]

myMap.isPrototypeOf // => [Function: isPrototypeOf]

myMap.propertyIsEnumerable // => [Function: propertyIsEnumerable]

myMap.toLocaleString // => [Function: toLocaleString]

myMap.constructor // => [Function: Object]

So if you try to access any of these properties, each of them already has a value even though this object is supposed to be empty.

This alone should be a clear reason not to use an object for an arbitrary-keyed hashmap, as it can lead to some really hairy bugs you’ll only discover later.
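To make that kind of bug concrete, here is a small sketch of a word counter. The `words` input is made up for illustration; the point is only what happens when one of your keys collides with something inherited from `Object.prototype`:

```javascript
// Count word occurrences, first with a plain object, then with a Map.
const words = ['constructor', 'map', 'map']

// 🚩 Plain object: 'constructor' already resolves to an inherited function,
// so the "|| 0" fallback never kicks in for it
const objectCounts = {}
for (const word of words) {
  objectCounts[word] = (objectCounts[word] || 0) + 1
}
// objectCounts.map is 2 as expected, but objectCounts.constructor
// is now a string (the function's source text with "1" appended)

// ✅ Map: only keys you set yourself exist
const mapCounts = new Map()
for (const word of words) {
  mapCounts.set(word, (mapCounts.get(word) || 0) + 1)
}
// mapCounts.get('constructor') is 1, mapCounts.get('map') is 2
```

You could dodge this with `Object.create(null)`, but at that point you are working around the object rather than with it.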

Speaking of strange ways that JavaScript objects treat keys, iterating through objects is riddled with gotchas.

For instance, you may already know not to do this:

for (const key in myObject) {

// 🚩 You may stumble upon some inherited keys you didn't mean to

}

And you may have been told instead to do this:

for (const key in myObject) {

if (myObject.hasOwnProperty(key)) {

// 🚩

}

}

But this is still problematic, as myObject.hasOwnProperty can easily be overridden with any other value. Nothing is preventing anyone from doing myObject.hasOwnProperty = () => explode().

So instead you should really do this funky mess:

for (const key in myObject) {

if (Object.prototype.hasOwnProperty.call(myObject, key)) {

// 😕

}

}

Or if you prefer your code to not look like a mess, you can use the more recently added Object.hasOwn:

for (const key in myObject) {

if (Object.hasOwn(myObject, key)) {

// 😐

}

}

Or you can give up on a for loop entirely and just use Object.keys with forEach.

Object.keys(myObject).forEach(key => {

// 😬

})

However, with maps, there are no such issues at all. You can use a standard for loop, with a standard iterator, and a really nice destructuring pattern to get both the key and value at once:

for (const [key, value] of myMap) {

// 😍

}

Me gusta.

In fact, this is so nice, we now have an Object.entries method to do something similar with objects. It's one additional step so doesn't feel quite so first-class, but hey it works.

for (const [key, value] of Object.entries(myObject)) {

// 🙂

}

Add that one to the long list of "loops in objects are ugly so choose one of the following 5 options you prefer".

But for Maps, it's nice to know there is one simple and elegant way to iterate built in directly.

Additionally, you can iterate over just keys or values as well:

for (const value of myMap.values()) {

// 🙂

}

for (const key of myMap.keys()) {

// 🙂

}

One additional perk of maps is they preserve the order of their keys. This has been a long asked for quality of objects, and now exists for maps.
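A quick sketch of the difference. Plain objects do iterate in a defined order in modern engines, but integer-like keys get sorted ahead of string keys, so what you get back is not necessarily what you put in. The variable names here are just for illustration:

```javascript
// Plain object: integer-like keys jump to the front, in ascending order
const obj = {}
obj.b = 'first'
obj[2] = 'second'
obj[1] = 'third'
const objectKeys = Object.keys(obj) // => ['1', '2', 'b']

// Map: keys come back in exactly the order they were inserted,
// and numeric keys stay numbers instead of becoming strings
const map = new Map()
map.set('b', 'first')
map.set(2, 'second')
map.set(1, 'third')
const mapKeys = Array.from(map.keys()) // => ['b', 2, 1]
```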

This gives us another very cool feature, which is that we can destructure keys directly from a map, in their exact order:

const [[firstKey, firstValue]] = myMap

This can also open up some interesting use cases, like trivially implementing an O(1) LRU Cache:
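A minimal sketch of such a cache, assuming a hypothetical `LRUCache` class with a `capacity` parameter (neither name comes from a library). It leans on the fact that a Map iterates in insertion order, so the first key is always the least recently used one:

```javascript
// Hypothetical LRU cache sketch built on Map's insertion order
class LRUCache {
  constructor(capacity) {
    this.capacity = capacity
    this.cache = new Map()
  }

  get(key) {
    if (!this.cache.has(key)) return undefined
    const value = this.cache.get(key)
    // Re-insert so this key becomes the most recently used
    this.cache.delete(key)
    this.cache.set(key, value)
    return value
  }

  set(key, value) {
    // Delete first so an existing key moves to the end
    this.cache.delete(key)
    this.cache.set(key, value)
    if (this.cache.size > this.capacity) {
      // The first key in iteration order is the least recently used
      const oldestKey = this.cache.keys().next().value
      this.cache.delete(oldestKey)
    }
  }
}

const lru = new LRUCache(2)
lru.set('a', 1)
lru.set('b', 2)
lru.get('a')    // touch 'a', so 'b' is now the oldest entry
lru.set('c', 3) // over capacity, evicts 'b'
```

Every operation is a constant number of Map calls, which is where the O(1) comes from.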

Now you might say, oh, well, objects have some advantages, like they're very easy to copy, for instance, using an object spread or assign.

const copied = {...myObject}

const copied = Object.assign({}, myObject)

But it turns out that maps are just as easy to copy:

const copied = new Map(myMap)

The reason this works is because the constructor of Map takes an iterable of [key, value] tuples. And conveniently, maps are iterable, producing tuples of their keys and values. Nice.
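One thing worth keeping in mind is that this is a shallow copy, the same as an object spread. A short sketch (the `original` and `copy` names are just for illustration): the two maps track their own keys, but the values inside are still shared references.

```javascript
const original = new Map([['todo', { done: false }]])
const copy = new Map(original)

// Adding a key only affects the copy
copy.set('extra', { done: true })

// Mutating a value is visible through both maps,
// because both hold a reference to the same object
copy.get('todo').done = true

const originalHasExtra = original.has('extra')  // => false
const originalDone = original.get('todo').done  // => true
```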

Similarly, you can also do deep copies of maps, just like you can with objects, using structuredClone:

const deepCopy = structuredClone(myMap)

Converting maps to objects is readily done using Object.fromEntries:

const myObj = Object.fromEntries(myMap)

And going the other way is straightforward as well, using Object.entries:

const myMap = new Map(Object.entries(myObj))

Easy!

And, now that we know this, we no longer have to construct maps using tuples:

const myMap = new Map([['key', 'value'], ['keyTwo', 'valueTwo']])

You can instead construct them like objects, which to me is a bit nicer on the eyes:

const myMap = new Map(Object.entries({

key: 'value',

keyTwo: 'valueTwo',

}))

Or you could make a handy little helper too:

const makeMap = (obj) => new Map(Object.entries(obj))

const myMap = makeMap({ key: 'value' })

Or with TypeScript:

const makeMap = <V = unknown>(obj: Record<string, V>) =>

new Map<string, V>(Object.entries(obj))

const myMap = makeMap({ key: 'value' })

// => Map<string, string>

I’m a fan of that.

Maps are not just a more ergonomic and better-performing way to handle key value maps in JavaScript. They can even do things that you just cannot accomplish at all with plain objects.

For instance, maps are not limited to only having strings as keys — you can use any type of object as a key for a map. And I mean, like, anything.

myMap.set({}, value)

myMap.set([], value)

myMap.set(document.body, value)

myMap.set(function() {}, value)

myMap.set(myDog, value)

But, why?

One helpful use case for this is associating metadata with an object without having to modify that object directly.

const metadata = new Map()

metadata.set(myDomNode, {

internalId: '...'

})

metadata.get(myDomNode)

// => { internalId: '...' }

This can be useful, for instance, when you want to associate temporary state to objects you read and write from a database. You can add as much temporary data associated directly with the object reference, without risk.

const metadata = new Map()

metadata.set(myTodo, {

focused: true

})

metadata.get(myTodo)

// => { focused: true }

Now when we save myTodo back to the database, only the values we want saved are there, and our temporary state (which is in a separate map) does not get included accidentally.

This does have one issue though.

Normally, the garbage collector would collect this object and remove it from memory. However, because our map is holding a reference, it'll never be garbage collected, causing a memory leak.

Here’s where we can use the WeakMap type. Weak maps perfectly solve for the above memory leak as they hold a weak reference to the object.

So if all other references are removed, the object will automatically be garbage collected and removed from this weak map.

const metadata = new WeakMap()

// ✅ No memory leak, myTodo will be removed from the map

// automatically when there are no other references

metadata.set(myTodo, {

focused: true

})

A few remaining useful things to know about Maps before we continue on:

map.clear() // Clear a map entirely

map.size // Get the size of the map

map.keys() // Iterator of all map keys

map.values() // Iterator of all map values

Ok, you get it, maps have nice methods. Moving on.

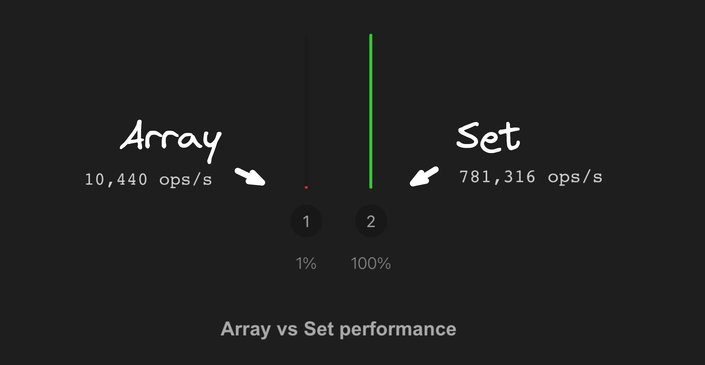

If we are talking about maps, we should also mention their cousin, Sets, which give us a better-performing way to create a unique list of elements where we can easily add, remove, and look up if a set contains an item:

const set = new Set([1, 2, 3])

set.add(3)

set.delete(4)

set.has(5)

In some cases, sets can yield significantly better performance than the equivalent operations with an array.

Blah blah microbenchmarks are not perfect, test your own code under real-world conditions to verify you get a benefit, or don't just take my word for it.
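For a feel of where sets tend to shine, here is a small sketch of two common jobs: deduplicating a list and checking membership. The `ids` array is made up for illustration.

```javascript
const ids = [3, 1, 3, 2, 1]

// Deduplicate in one line; a Set keeps the first occurrence of each value
const uniqueIds = [...new Set(ids)] // => [3, 1, 2]

// Membership checks: has() is a direct lookup,
// while Array.prototype.includes() scans the array item by item
const seen = new Set(ids)
const hasTwo = seen.has(2)   // => true
const hasFive = seen.has(5)  // => false
const arrayHasTwo = ids.includes(2) // same answer, different cost on big lists
```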

Similarly, we get a WeakSet class in JavaScript that will help us avoid memory leaks as well.

// No memory leaks here, captain 🫡

const checkedTodos = new WeakSet([todo1, todo2, todo3])

Now you might say there's one last advantage that plain objects and arrays have over maps and sets: serialization.

Ha! You thought you got me on that one. But I’ve got answers for you, friend.

So, yes, JSON.stringify() / JSON.parse() support for objects and arrays is extremely handy.

But, have you ever noticed that when you want to pretty print JSON you always have to add a null as the second argument? Do you know what that parameter even does?

JSON.stringify(obj, null, 2)

// ^^^^ what dis do

As it turns out, that parameter can be very helpful to us. It is called a replacer and it allows us to define how any custom type should be serialized.

We can use this to easily convert maps and sets to objects and arrays for serialization:

JSON.stringify(obj, (key, value) => {

// Convert maps to plain objects

if (value instanceof Map) {

return Object.fromEntries(value)

}

// Convert sets to arrays

if (value instanceof Set) {

return Array.from(value)

}

return value

})

Why did the JavaScript developer quit their job? They didn’t get arrays. Ha ha ho ho. Ok.

Now we can just abstract this into a basic reusable function, and serialize away.

const test = { set: new Set([1, 2, 3]), map: new Map([["key", "value"]]) }

JSON.stringify(test, replacer)

// => '{"set":[1,2,3],"map":{"key":"value"}}'

For converting back, we can use the same trick with JSON.parse(), but doing the opposite, by using its reviver parameter, to convert arrays back to Sets and objects back to maps when parsing:

JSON.parse(string, (key, value) => {

if (Array.isArray(value)) {

return new Set(value)

}

if (value && typeof value === 'object') {

return new Map(Object.entries(value))

}

return value

})

Also note that both replacers and revivers work recursively, so they are able to serialize and deserialize maps and sets anywhere in our JSON tree.
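Here is a sketch of that recursion in action, with the two callbacks pulled out into named functions. The names `naiveReplacer` and `naiveReviver` are made up for this example; the logic is the same as the inline versions above.

```javascript
function naiveReplacer(key, value) {
  if (value instanceof Map) return Object.fromEntries(value)
  if (value instanceof Set) return Array.from(value)
  return value
}

function naiveReviver(key, value) {
  if (Array.isArray(value)) return new Set(value)
  if (value && typeof value === 'object') return new Map(Object.entries(value))
  return value
}

// A map containing a set and another map
const nested = new Map([
  ['tags', new Set(['a', 'b'])],
  ['owner', new Map([['name', 'Steve']])],
])

// The replacer runs on the root first, then on each nested value
const json = JSON.stringify(nested, naiveReplacer)
// json is '{"tags":["a","b"],"owner":{"name":"Steve"}}'

// The reviver runs on the innermost values first, then works outward
const restored = JSON.parse(json, naiveReviver)
const restoredTagHasB = restored.get('tags').has('b')      // => true
const restoredOwner = restored.get('owner').get('name')    // => 'Steve'
```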

But, there is just one small problem with our above serialization implementation.

We currently don’t differentiate a plain object or array versus a map or a set at parse time, so we cannot intermix plain objects and maps in our JSON or we will end up with this:

const obj = { hello: 'world' }

const str = JSON.stringify(obj, replacer)

const parsed = JSON.parse(str, reviver)

// Map<string, string>

We can solve this by creating a special property; for example, called __type, to denote when something should be a map or a set as opposed to a plain object or array, like so:

function replacer(key, value) {

if (value instanceof Map) {

return { __type: 'Map', value: Object.fromEntries(value) }

}

if (value instanceof Set) {

return { __type: 'Set', value: Array.from(value) }

}

return value

}

function reviver(key, value) {

if (value?.__type === 'Set') {

return new Set(value.value)

}

if (value?.__type === 'Map') {

return new Map(Object.entries(value.value))

}

return value

}

const obj = { set: new Set([1, 2]), map: new Map([['key', 'value']]) }

const str = JSON.stringify(obj, replacer)

const newObj = JSON.parse(str, reviver)

// { set: new Set([1, 2]), map: new Map([['key', 'value']]) }

Now we have full JSON serialization and deserialization support for sets and maps. Neat.
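One caveat with the version above: Object.fromEntries turns every map key into a string, so a map keyed by numbers comes back keyed by strings. If that matters to you, a possible variation is to store the map as an array of [key, value] entries instead. This is only a sketch, and `entriesReplacer` and `entriesReviver` are hypothetical names:

```javascript
function entriesReplacer(key, value) {
  if (value instanceof Map) {
    // Keep entries as [key, value] pairs so non-string keys survive
    return { __type: 'Map', value: Array.from(value.entries()) }
  }
  if (value instanceof Set) {
    return { __type: 'Set', value: Array.from(value) }
  }
  return value
}

function entriesReviver(key, value) {
  if (value?.__type === 'Map') return new Map(value.value)
  if (value?.__type === 'Set') return new Set(value.value)
  return value
}

const scores = new Map([[1, 'one'], [2, 'two']])
const scoresJson = JSON.stringify(scores, entriesReplacer)
const roundTripped = JSON.parse(scoresJson, entriesReviver)

const byNumber = roundTripped.get(1)   // => 'one', the key is still a number
const byString = roundTripped.get('1') // => undefined
```

Keys still have to be something JSON can represent, so object keys (like DOM nodes) will not round-trip this way.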

For structured objects that have a well-defined set of keys — such as if every event should have a title and a date — you generally want an object.

// For structured objects, use Object

const event = {

title: 'Builder.io Conf',

date: new Date()

}

They're very optimized for fast reads and writes when you have a fixed set of keys.

When you can have any number of keys, and you may need to add and remove keys frequently, consider using map for better performance and ergonomics.

// For dynamic hashmaps, use Map

const eventsMap = new Map()

eventsMap.set(event.id, event)

eventsMap.delete(event.id)

When creating an array where the order of elements matters and you may intentionally want duplicates in the array, then a plain array is generally a great idea.

// For ordered lists, or those that may need duplicate items, use Array

const myArray = [1, 2, 3, 2, 1]

But when you know you never want duplicates and the order of items doesn't matter, consider using a set.

// For unordered unique lists, use Set

const set = new Set([1, 2, 3])

Hi! I'm Steve, CEO of Builder.io.

We make a way to drag + drop with your components to create pages and other CMS content on your site or app, visually.
