How does Dropbox make that effect where it looks like I am painting a McLaren as I drag my mouse over it?

They are using WebGL with no libraries or frameworks, just raw, hand-coded WebGL shaders.

A lot of people hear "WebGL" and think of heavy 3D scenes and big, slow libraries, but it does not have to be that way.

WebGL shaders are written in GLSL, a small, specialized language that can seem scary at first but really isn't.

WebGL itself isn't slow. What can be slow is loading and rendering large, complex 3D models.

Let’s learn the basics so you can build effects like this one, either by hand or with AI (as I did in Builder.io).

What's really cool about WebGL is that it gives you per-pixel precision: fragment shaders run once for every pixel, in parallel on your GPU.

That gives us a level of per-pixel control that is hard to get any other way on the web, and it lets us do all kinds of cool things:

Playing with WebGL effects through prompting in Builder.io.

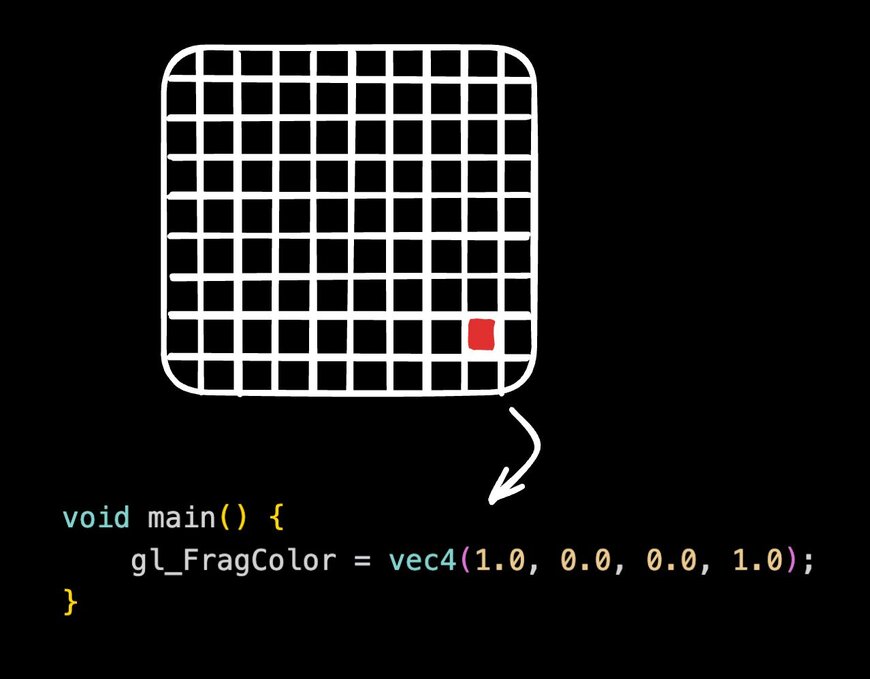

A fragment shader runs once per pixel and returns the color for that pixel. The absolute smallest version just hard-codes a color. That is it.

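```glsl
precision mediump float;

void main() {
  gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0); // solid green (red, green, blue, alpha)
}
```

Change the numbers to make it blue or red. The four values are red, green, blue, and alpha, each from 0.0 to 1.0. You now have a working shader.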
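"Runs once per pixel" is easier to picture with a CPU-side JavaScript sketch of what the GPU does with that shader. `runFragmentShader` and `solidGreen` are hypothetical helpers written only for illustration. A real GPU runs these invocations in parallel and writes straight to the framebuffer instead of looping.

```javascript
// CPU-side illustration of a fragment shader pass: call `shade` once for
// every pixel and store the RGBA color it returns (0..1 floats) as bytes.
// The GPU does the same work in parallel; this is a plain nested loop.
function runFragmentShader(width, height, shade) {
  const pixels = new Uint8ClampedArray(width * height * 4);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      // Like gl_FragCoord, pass the pixel center, e.g. (0.5, 0.5) for the first pixel
      const [r, g, b, a] = shade({ x: x + 0.5, y: y + 0.5 });
      const i = (y * width + x) * 4;
      pixels[i] = r * 255;
      pixels[i + 1] = g * 255;
      pixels[i + 2] = b * 255;
      pixels[i + 3] = a * 255;
    }
  }
  return pixels;
}

// The solid-green shader above, written as a JavaScript function
const solidGreen = () => [0.0, 1.0, 0.0, 1.0];

const out = runFragmentShader(2, 2, solidGreen);
console.log(Array.from(out.slice(0, 4))); // first pixel: 0, 255, 0, 255
```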

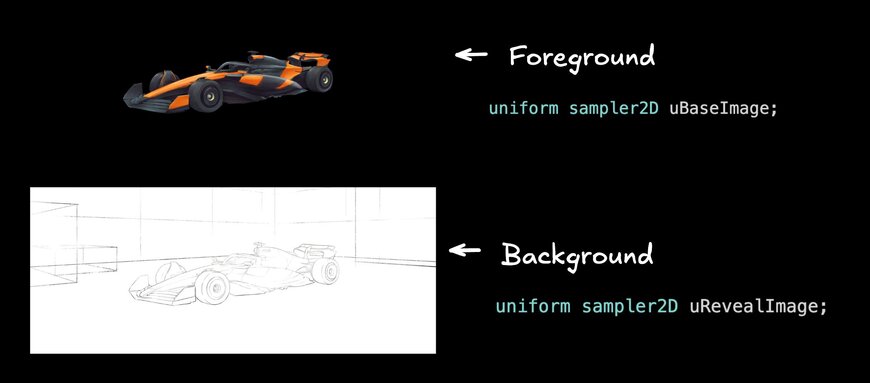

We have two images: one is the outline or unpainted car, the other is the painted version.

The fragment shader chooses per pixel which one to show. Near the cursor we reveal paint, away from the cursor we show the outline. That is the entire trick.
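The blend behind that choice is GLSL's built-in `mix(a, b, t)`, which computes `a * (1 - t) + b * t` for each channel. Below is a plain JavaScript illustration of the per-pixel decision. `revealPixel` is a hypothetical name, not code from the effect itself.

```javascript
// GLSL's mix(a, b, t): linear blend per channel, a * (1 - t) + b * t
function mix(a, b, t) {
  return a.map((av, i) => av * (1 - t) + b[i] * t);
}

// Per-pixel choice between the outline and painted images.
// mask = 1 near the cursor (show paint), 0 far away (show outline).
function revealPixel(outlineRGBA, paintedRGBA, mask) {
  return mix(outlineRGBA, paintedRGBA, mask);
}

const outlinePx = [1, 1, 1, 1]; // white outline pixel
const paintedPx = [1, 0, 0, 1]; // red paint pixel
console.log(revealPixel(outlinePx, paintedPx, 0));   // 1, 1, 1, 1 (outline)
console.log(revealPixel(outlinePx, paintedPx, 1));   // 1, 0, 0, 1 (paint)
console.log(revealPixel(outlinePx, paintedPx, 0.5)); // 1, 0.5, 0.5, 1 (soft edge)
```

Values of `mask` between 0 and 1 are what make a soft, feathered edge possible.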

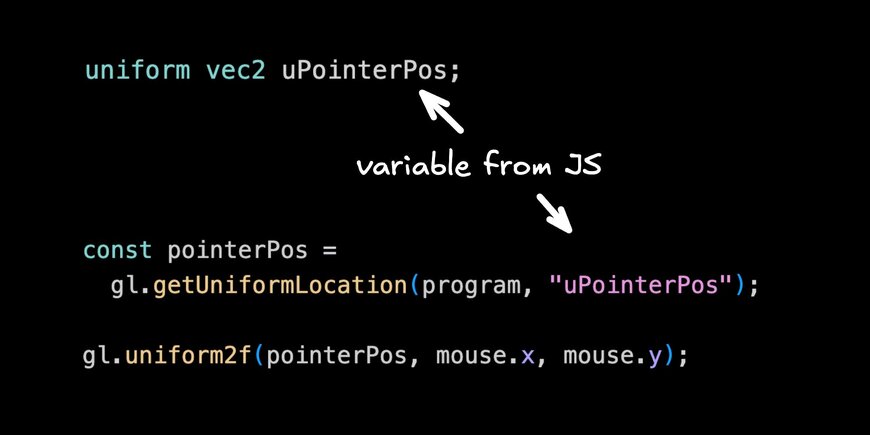

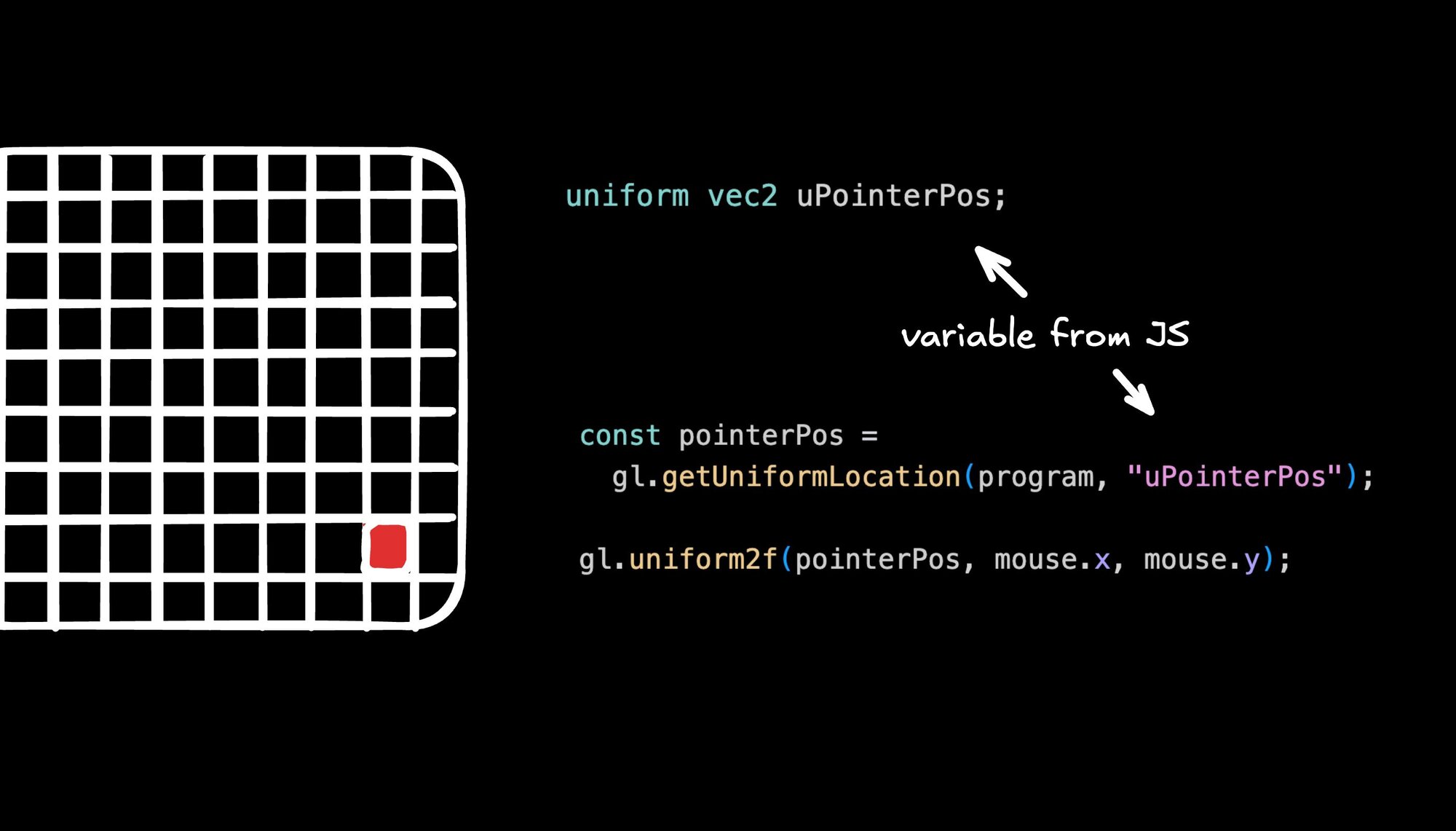

To do that we pass a few values from JavaScript to the shader as uniforms:

- `uBaseImage`: the outline image
- `uRevealImage`: the painted image
- `uPointerPos`: the mouse position in pixels
- `uViewport`: the canvas size
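Each of these names must be looked up on the linked program before JavaScript can set it. Here is a small sketch that does the lookup once, up front, using the standard `gl.getUniformLocation` call. `getUniformLocations` is a hypothetical helper name, not part of WebGL.

```javascript
// Look up each uniform's location once after linking, instead of every frame.
// `gl` is a WebGLRenderingContext and `program` a linked WebGLProgram.
function getUniformLocations(gl, program, names) {
  const locations = {};
  for (const name of names) {
    const location = gl.getUniformLocation(program, name);
    // null means the name is misspelled or the compiler removed an unused uniform
    if (location === null) console.warn(`Uniform not found: ${name}`);
    locations[name] = location;
  }
  return locations;
}

// Usage in the browser:
// const u = getUniformLocations(gl, program,
//   ["uBaseImage", "uRevealImage", "uPointerPos", "uViewport"]);
// gl.uniform2f(u.uViewport, canvas.width, canvas.height);
```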

Inside the shader we compute a simple circular mask around the mouse and use it to mix between the two images.

Start with a soft circle so the edge looks smooth. Later you can add a tiny bit of noise if you want texture.

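```glsl
precision mediump float;

uniform vec2 uViewport;         // canvas width, height (px)
uniform vec2 uPointerPos;       // mouse x, y (px, origin at bottom-left)
uniform sampler2D uBaseImage;   // outline image
uniform sampler2D uRevealImage; // painted image

void main() {
  // 0..1 coords for this pixel
  vec2 uv = gl_FragCoord.xy / uViewport;
  // flip Y for texture lookups: gl_FragCoord starts at the bottom, image rows
  // start at the top (assumes UNPACK_FLIP_Y_WEBGL is left false, as in the full code)
  vec2 texUV = vec2(uv.x, 1.0 - uv.y);

  // soft circular mask around the cursor; measure in pixels and divide by the
  // height so the circle stays round on a non-square canvas
  float d = distance(gl_FragCoord.xy, uPointerPos) / uViewport.y;
  float radius = 0.10;
  float feather = 0.08;
  // 1.0 inside the circle, fading to 0.0 at the edge
  // (smoothstep is undefined when edge0 >= edge1, so invert the result instead)
  float mask = 1.0 - smoothstep(radius - feather, radius, d);

  // sample both images and blend by mask
  vec4 baseCol = texture2D(uBaseImage, texUV);
  vec4 paintCol = texture2D(uRevealImage, texUV);
  gl_FragColor = mix(baseCol, paintCol, mask);
}
```

You can stop here. It already feels good.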
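Here is the soft-mask math ported to JavaScript, so you can see the values it produces. The port follows the GLSL definition of `smoothstep` (clamp, then the curve `t * t * (3 - 2t)`) and keeps the edges in ascending order. `softMask` is a hypothetical name used only for this illustration.

```javascript
// JavaScript port of GLSL smoothstep(edge0, edge1, x), for edge0 < edge1
function smoothstep(edge0, edge1, x) {
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
  return t * t * (3 - 2 * t);
}

// Soft circular mask: 1 inside (radius - feather), 0 beyond radius,
// with a smooth ramp between the two.
function softMask(d, radius = 0.1, feather = 0.08) {
  return 1 - smoothstep(radius - feather, radius, d);
}

console.log(softMask(0));   // 1 (right under the cursor)
console.log(softMask(0.2)); // 0 (well outside the circle)
```

Halfway through the feather (`d = 0.06`), the mask comes out at about 0.5. That is the smooth edge.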

You only need the essentials: create a canvas and a WebGL context, compile the tiny vertex shader and the fragment shader above, draw a full-screen rectangle, load two textures, and update the uniforms each frame.
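The walkthrough never shows the "tiny vertex shader". Below is a minimal sketch of one that should fit. It declares the `a_position` attribute that the full setup code at the end looks up with `gl.getAttribLocation`, and it passes each corner through unchanged. It is an assumption, not the exact shader from the demo, and the constant names are hypothetical.

```javascript
// Minimal GLSL ES 1.0 vertex shader, kept as a JavaScript string for gl.shaderSource.
// It forwards each clip-space corner unchanged; all real work happens per pixel.
const vertexShaderSource = `
attribute vec2 a_position;
void main() {
  gl_Position = vec4(a_position, 0.0, 1.0);
}
`;

// Four clip-space corners (-1..1) drawn as a TRIANGLE_STRIP cover the whole canvas.
const fullScreenQuad = new Float32Array([
  -1, -1, // bottom-left
   1, -1, // bottom-right
  -1,  1, // top-left
   1,  1, // top-right
]);
```

With this in place, `vertexShaderSource` is what you would pass as the second argument to `initWebGL` in the full code.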

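```javascript
const mouse = { x: 0, y: 0 };

canvas.addEventListener("mousemove", (e) => {
  const r = canvas.getBoundingClientRect();
  // CSS pixels -> canvas pixels (they differ when devicePixelRatio > 1)
  mouse.x = (e.clientX - r.left) * (canvas.width / r.width);
  mouse.y = (e.clientY - r.top) * (canvas.height / r.height);
});

// each frame (uViewport etc. hold locations from gl.getUniformLocation)
gl.uniform2f(uViewport, canvas.width, canvas.height);
gl.uniform2f(uPointerPos, mouse.x, canvas.height - mouse.y); // flip Y: DOM is top-down, gl_FragCoord is bottom-up
gl.uniform1i(uBaseImage, 0); // texture unit 0 (TEXTURE0)
gl.uniform1i(uRevealImage, 1); // texture unit 1 (TEXTURE1)
gl.drawArrays(gl.TRIANGLE_STRIP, 0, 4); // 4-vertex full-screen rectangle, as in the full code
```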

No framework. One draw call. The shader does the work.

Fragment shaders give per-pixel control and run in parallel on the GPU, which makes this kind of effect fast. The code stays small because you only write the pieces you need.

I described the effect in plain language, listed the uniforms, and let Builder.io scaffold the shader and the minimal WebGL setup.

I uploaded those two images (the same two image URLs loaded by `loadTexture` in the full code at the end) and described the effect:

> Use webgl to layer these images on top of each other.
>
> Default to the outline image, and when I drag my mouse over reveal the image beneath like a spotlight.
>
> Make the spotlight about 200px by 200px, with a noisy texture so it looks as if I am painting.

I tweaked a few values in code, kept it dependency-free so performance stayed high, and sent a pull request when it felt right.

The cool part about using Builder.io for this or other effects is you can apply them to your real production code (your actual existing sites or apps).

Once you are happy with your enhancements, you can send a pull request for engineering to review and get your handiwork live to real users.

If you want a bit more realism later, add additional noise to the mask for a rougher edge or accumulate the reveal into a texture so paint stays after the cursor passes. You can prompt an agent to add those once the basic version is working.
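To make "accumulate the reveal" concrete, here is a CPU-side sketch of the per-pixel rule. Keep a persistent mask, and each frame store the larger of the saved value and the current spotlight, so paint never fades once revealed. On the GPU this is usually done by rendering the mask into framebuffer textures and reading the previous one back each frame ("ping-ponging"). This sketch only shows the rule, and `accumulateReveal` is a hypothetical name.

```javascript
// Per-pixel accumulation rule: stored = max(stored, current).
// `stored` persists across frames; `current` is this frame's spotlight mask.
function accumulateReveal(stored, current) {
  for (let i = 0; i < stored.length; i++) {
    stored[i] = Math.max(stored[i], current[i]);
  }
  return stored;
}

const revealMask = new Float32Array(4);                      // 4 pixels, all unpainted
accumulateReveal(revealMask, Float32Array.of(1, 0.5, 0, 0)); // cursor over pixel 0
accumulateReveal(revealMask, Float32Array.of(0, 0, 1, 0));   // cursor moved to pixel 2
console.log(Array.from(revealMask)); // 1, 0.5, 1, 0 (pixel 0 stays painted)
```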

That is it. Start with the hard-coded color so the shader feels concrete. Swap in the two images. Add the simple circle. Ship. Then layer on texture if you want.

Here’s the full code I landed with:

```glsl
#ifdef GL_FRAGMENT_PRECISION_HIGH
precision highp float;
#else
precision mediump float;
#endif

uniform vec2 uViewport;
uniform vec2 uPointerPos;
uniform float uElapsedTime;
uniform sampler2D uBaseImage;
uniform sampler2D uRevealImage;
uniform vec2 uImageDimensions;

// Hash function to generate pseudo-random values from 3D coordinates
float hash3D(vec3 p) {
  p = fract(p * vec3(0.1031, 0.1030, 0.0973));
  p += dot(p, p.zyx + 19.19);
  return fract((p.x + p.y) * p.z);
}

// 3D value noise using trilinear interpolation
float valueNoise3D(vec3 pos) {
  vec3 cellCorner = floor(pos);
  vec3 localPos = fract(pos);

  // Sample noise at the 8 cube corners
  float n000 = hash3D(cellCorner + vec3(0.0, 0.0, 0.0));
  float n100 = hash3D(cellCorner + vec3(1.0, 0.0, 0.0));
  float n010 = hash3D(cellCorner + vec3(0.0, 1.0, 0.0));
  float n110 = hash3D(cellCorner + vec3(1.0, 1.0, 0.0));
  float n001 = hash3D(cellCorner + vec3(0.0, 0.0, 1.0));
  float n101 = hash3D(cellCorner + vec3(1.0, 0.0, 1.0));
  float n011 = hash3D(cellCorner + vec3(0.0, 1.0, 1.0));
  float n111 = hash3D(cellCorner + vec3(1.0, 1.0, 1.0));

  // Smooth (smoothstep-style) interpolation weights
  vec3 blend = localPos * localPos * (3.0 - 2.0 * localPos);

  // Trilinear interpolation
  return mix(
    mix(mix(n000, n100, blend.x), mix(n010, n110, blend.x), blend.y),
    mix(mix(n001, n101, blend.x), mix(n011, n111, blend.x), blend.y),
    blend.z
  );
}

// Fractal Brownian Motion: 3 octaves of layered noise for detail
float fbm(vec3 coord, float frequency, float persistence) {
  float total = 0.0;
  float amplitude = 1.0;
  float maxValue = 0.0;
  for (int octave = 0; octave < 3; octave++) {
    total += valueNoise3D(coord * frequency) * amplitude;
    maxValue += amplitude;
    frequency *= 2.0;
    amplitude *= persistence;
  }
  return total / maxValue; // normalize back to 0..1
}

// Calculate UV coordinates with aspect-ratio-preserving "cover" behavior
vec2 getCoverUV(vec2 screenUV, vec2 canvasSize, vec2 contentSize) {
  float canvasRatio = canvasSize.x / canvasSize.y;
  float contentRatio = contentSize.x / contentSize.y;

  vec2 scaleFactor;
  if (canvasRatio > contentRatio) {
    scaleFactor = vec2(1.0, contentRatio / canvasRatio);
  } else {
    scaleFactor = vec2(canvasRatio / contentRatio, 1.0);
  }

  // Zoom in about 20% (shrinking the UV range by 1.2 makes the image appear larger)
  scaleFactor /= 1.2;
  vec2 adjustedUV = (screenUV - 0.5) * scaleFactor + 0.5;

  // Shift the image up 10% for vertical framing
  adjustedUV.y -= 0.1;

  // Flip Y: gl_FragCoord starts at the bottom, image rows start at the top
  adjustedUV.y = 1.0 - adjustedUV.y;
  return adjustedUV;
}

// Circular spotlight gradient with a linear falloff. Not clamped: the result
// exceeds 1.0 inside the core and goes negative outside radius + softness.
float createSpotlight(vec2 screenPos, vec2 center, float radius, float softness) {
  float distFromCenter = distance(screenPos, center);
  float gradient = 1.0 - ((distFromCenter - radius) / softness);
  return gradient;
}

void main() {
  vec2 screenUV = gl_FragCoord.xy / uViewport;
  vec2 mouseNormalized = uPointerPos / uViewport;

  // Get texture coordinates with proper aspect-ratio handling
  vec2 textureUV = getCoverUV(screenUV, uViewport, uImageDimensions);

  // Sample both image states
  vec4 defaultImage = texture2D(uBaseImage, textureUV);
  vec4 revealedImage = texture2D(uRevealImage, textureUV);

  // Set up animated noise coordinates (z drifts slowly over time)
  vec3 noiseCoord = vec3(screenUV, uElapsedTime / 200.0);

  // Correct UV for aspect ratio to make the spotlight circular
  float aspectRatio = uViewport.x / uViewport.y;
  vec2 correctedUV = screenUV;
  vec2 correctedMouse = mouseNormalized;
  correctedUV.x *= aspectRatio;
  correctedMouse.x *= aspectRatio;

  // Calculate spotlight influence around the mouse
  float spotlightCore = 0.0135;
  float spotlightFalloff = 0.216;
  float spotlightGradient = createSpotlight(correctedUV, correctedMouse, spotlightCore, spotlightFalloff);

  // Generate procedural noise only within the spotlight area (optimization)
  float turbulence = 0.0;
  if (spotlightGradient > 0.0) {
    turbulence = fbm(noiseCoord, 10.0, 0.6);
  }

  // Build the reveal mask from multiple factors
  float revealMask = spotlightGradient;

  // Use image color channels as a shape mask
  float shapeMask = revealedImage.g * (0.4 * revealedImage.b + 0.6) * 2.0;
  revealMask *= shapeMask;

  // Add base spotlight contribution
  revealMask += spotlightGradient / 2.5;

  // Apply turbulent noise to create rough edges
  revealMask *= max(0.0, (turbulence - 0.1) * 4.0);

  // Boost the center of the spotlight for a solid core
  revealMask += max(0.0, (spotlightGradient - 0.3) * 0.7);

  // Apply a hard threshold and alpha masking
  float revealThreshold = 0.5;
  float finalReveal = (revealMask > revealThreshold) ? 1.0 : 0.0;
  finalReveal *= revealedImage.a;

  // Blend between default and revealed images
  gl_FragColor = mix(defaultImage, revealedImage, finalReveal);
}
```

```javascript
let animationFrameId = null;
let resizeHandler = null;

function createShader(gl, type, source) {
  const shader = gl.createShader(type);
  if (!shader) return null;
  gl.shaderSource(shader, source);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    console.error("Shader compile error:", gl.getShaderInfoLog(shader));
    gl.deleteShader(shader);
    return null;
  }
  return shader;
}

function createProgram(gl, vertexShader, fragmentShader) {
  const program = gl.createProgram();
  if (!program) return null;
  gl.attachShader(program, vertexShader);
  gl.attachShader(program, fragmentShader);
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    console.error("Program link error:", gl.getProgramInfoLog(program));
    gl.deleteProgram(program);
    return null;
  }
  return program;
}

function loadTexture(gl, url) {
  return new Promise((resolve) => {
    const texture = gl.createTexture();
    const image = new Image();
    image.crossOrigin = "anonymous";
    image.onload = () => {
      gl.bindTexture(gl.TEXTURE_2D, texture);
      // Keep rows top-down; the fragment shader flips Y in getCoverUV
      gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, false);
      gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, image);
      // CLAMP_TO_EDGE + LINEAR (no mipmaps) lets non-power-of-two images work in WebGL 1
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
      resolve({ texture, width: image.width, height: image.height });
    };
    image.onerror = () => {
      console.error("Failed to load texture:", url);
      resolve(null);
    };
    image.src = url;
  });
}

export async function initWebGL(canvas, vertexShaderSource, fragmentShaderSource) {
  // Tear down the previous render loop and resize listener when re-initializing
  if (animationFrameId) {
    cancelAnimationFrame(animationFrameId);
  }
  if (resizeHandler) {
    window.removeEventListener("resize", resizeHandler);
  }

  const gl = canvas.getContext("webgl") || canvas.getContext("experimental-webgl");
  if (!gl) {
    console.error("WebGL not supported");
    return;
  }

  const vertexShader = createShader(gl, gl.VERTEX_SHADER, vertexShaderSource);
  const fragmentShader = createShader(gl, gl.FRAGMENT_SHADER, fragmentShaderSource);
  if (!vertexShader || !fragmentShader) {
    console.error("Failed to create shaders");
    return;
  }

  const program = createProgram(gl, vertexShader, fragmentShader);
  if (!program) {
    console.error("Failed to create program");
    return;
  }

  const idleTextureData = await loadTexture(gl, "https://cdn.builder.io/api/v1/image/assets%2FYJIGb4i01jvw0SRdL5Bt%2Fb525042cc4df45a580db530646439113");
  const activeTextureData = await loadTexture(gl, "https://cdn.builder.io/api/v1/image/assets%2FYJIGb4i01jvw0SRdL5Bt%2F0a3f6536eea3404fa673ca8806e36d85");
  if (!idleTextureData || !activeTextureData) {
    console.error("Failed to load textures");
    return;
  }
  const idleTexture = idleTextureData.texture;
  const activeTexture = activeTextureData.texture;

  // Full-screen quad: 4 clip-space corners drawn as a triangle strip
  const positionBuffer = gl.createBuffer();
  gl.bindBuffer(gl.ARRAY_BUFFER, positionBuffer);
  const positions = new Float32Array([-1, -1, 1, -1, -1, 1, 1, 1]);
  gl.bufferData(gl.ARRAY_BUFFER, positions, gl.STATIC_DRAW);
  const positionLocation = gl.getAttribLocation(program, "a_position");
  gl.enableVertexAttribArray(positionLocation);
  gl.vertexAttribPointer(positionLocation, 2, gl.FLOAT, false, 0, 0);

  // Look up uniform locations once instead of on every frame
  const viewportLocation = gl.getUniformLocation(program, "uViewport");
  const pointerPosLocation = gl.getUniformLocation(program, "uPointerPos");
  const elapsedTimeLocation = gl.getUniformLocation(program, "uElapsedTime");
  const baseImageLocation = gl.getUniformLocation(program, "uBaseImage");
  const revealImageLocation = gl.getUniformLocation(program, "uRevealImage");
  const imageDimensionsLocation = gl.getUniformLocation(program, "uImageDimensions");

  const mouse = { x: 0, y: 0 };
  const startTime = Date.now();
  const imageSize = { width: idleTextureData.width, height: idleTextureData.height };

  // Size the drawing buffer in device pixels so it stays sharp on HiDPI screens
  function resize() {
    const width = canvas.parentElement.clientWidth;
    const height = canvas.parentElement.clientHeight;
    const dpr = window.devicePixelRatio || 1;
    canvas.width = Math.floor(width * dpr);
    canvas.height = Math.floor(height * dpr);
    canvas.style.width = width + "px";
    canvas.style.height = height + "px";
    gl.viewport(0, 0, canvas.width, canvas.height);
  }
  resizeHandler = resize;
  window.addEventListener("resize", resize, false);
  resize();

  // Track the mouse in CSS pixels relative to the canvas
  const handleMouseMove = (e) => {
    const rect = canvas.getBoundingClientRect();
    mouse.x = e.clientX - rect.left;
    mouse.y = e.clientY - rect.top;
  };
  canvas.addEventListener("mousemove", handleMouseMove);

  function render() {
    animationFrameId = requestAnimationFrame(render);

    gl.clearColor(0, 0, 0, 1);
    gl.clear(gl.COLOR_BUFFER_BIT);
    gl.useProgram(program);

    const dpr = window.devicePixelRatio || 1;
    gl.uniform2f(viewportLocation, canvas.width, canvas.height);
    // CSS pixels from the top -> device pixels from the bottom (gl_FragCoord space)
    gl.uniform2f(pointerPosLocation, mouse.x * dpr, (canvas.clientHeight - mouse.y) * dpr);
    gl.uniform1f(elapsedTimeLocation, (Date.now() - startTime) / 1000); // seconds
    gl.uniform2f(imageDimensionsLocation, imageSize.width, imageSize.height);

    gl.activeTexture(gl.TEXTURE0);
    gl.bindTexture(gl.TEXTURE_2D, idleTexture);
    gl.uniform1i(baseImageLocation, 0);

    gl.activeTexture(gl.TEXTURE1);
    gl.bindTexture(gl.TEXTURE_2D, activeTexture);
    gl.uniform1i(revealImageLocation, 1);

    gl.drawArrays(gl.TRIANGLE_STRIP, 0, 4);
  }

  render();
  return { gl };
}
```

Builder.io visually edits code, uses your design system, and sends pull requests.
