Today, websites are more interactive and useful than ever, which also means they’re more complex than ever. Users have come to expect that interactivity and usefulness, and they’re not going to want to give it up. All of this means more JavaScript is being sent to the browser on page load than ever, and that trend isn’t likely to slow down anytime soon. We can expect sites to keep getting better and more complex, but what should we do about JavaScript?

How did we get here?

It’s well understood that the amount of JavaScript being sent to browsers is steadily increasing, as shown in this graph from httparchive.org.

Some people like to argue that the site we served ten years ago and the one we serve today are the same. But it’s not a fair comparison. The sites of today are way more complex.

And while some of the complexity comes from better interactions and functionality for the end-users, a lot of it comes from helping us gain insight into our users' behavior, which helps us build even better sites. Our sites ultimately get bigger because they do more stuff not only for our end-users, but for us as well.

CPUs have stalled.

Every year, CPUs get faster, but most of the speedup now comes from improved parallelism. In recent years, per-core performance improvements have been slowing down. We’re approaching the limits of how fast CPU clocks can run and how much work a CPU can do per clock cycle. As a result, we see more multi-core CPUs (it is not uncommon for a phone to have 4 CPU cores and 4 GPU cores). So the main driver of CPU performance these days is parallelism across multiple cores, not improvements in a single core.

So, why are we talking about CPU speed and cores? As our sites get bigger and download more JavaScript, the CPU has more work to do. And because JavaScript is single-threaded, all of the additional work falls onto a single core. Therefore, this is not a problem that will get better by waiting a few years and expecting the hardware to catch up.

Ultimately, the amount of JavaScript we load is increasing faster than single-core CPU performance is improving. This is not sustainable.

Frameworks were not designed for this.

Frameworks for desktop applications existed long before the web. So when the web came along, we took our desktop philosophy of what a framework is and applied it to the web. Desktop frameworks can safely assume that all of the code is already available and that there is no server. The problem is that neither assumption holds on the web. We can't assume that the code is already available, and server pre-rendering has become an important part of our vocabulary.

We’re at a stage where the frameworks we have are pretending they’re desktop frameworks. They have not embraced SSR and lazy loading at their core.

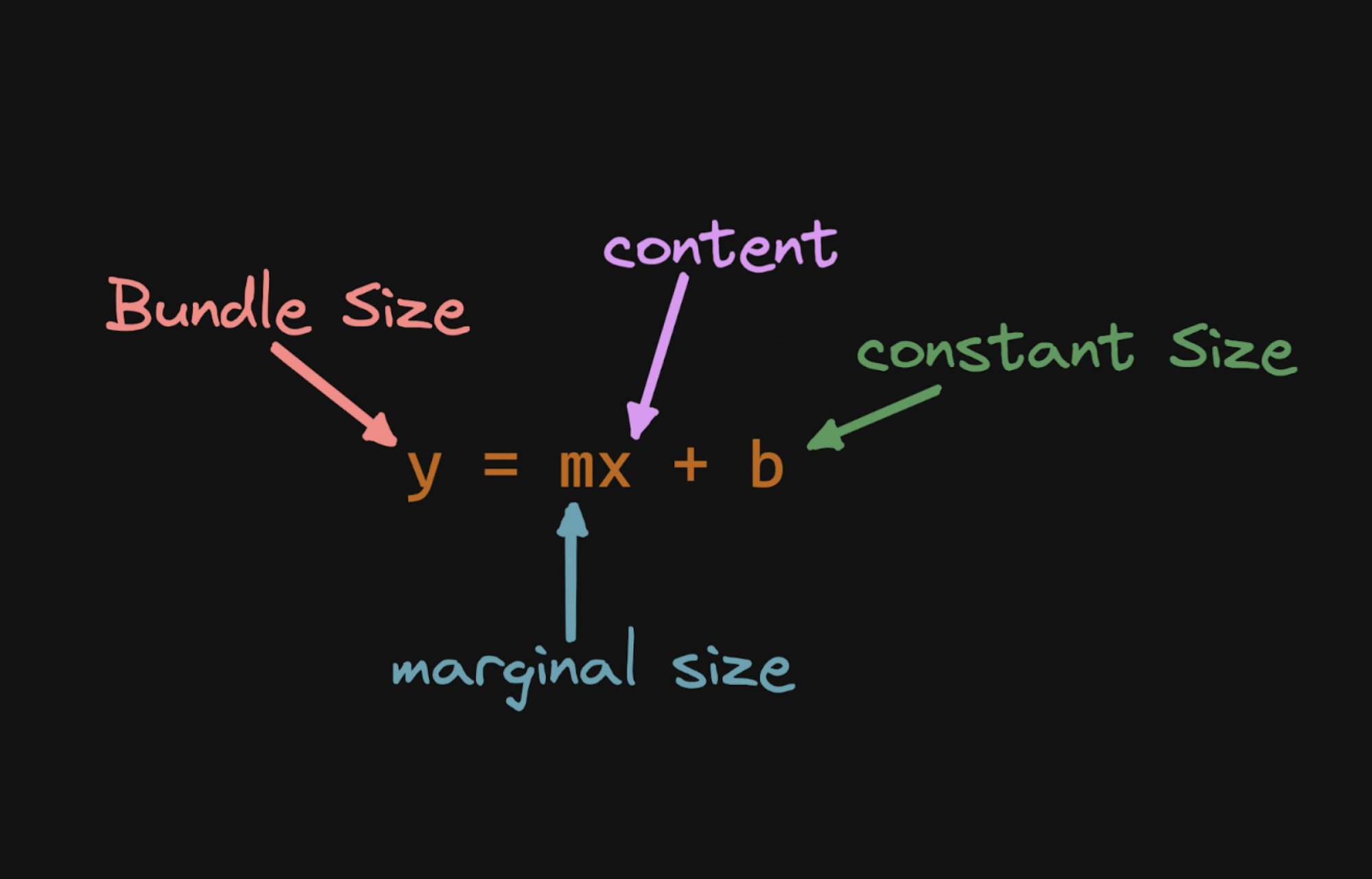

y=mx+b

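An application needs to boot up on the client. The bootup consists of a fixed cost of the framework and a variable cost of the application itself (the complexity of the application). So really, we are talking about a linear relationship which can be expressed as “y=mx+b”, where “y” is the bootup cost, “x” is the application complexity, “m” is the cost per unit of complexity, and “b” is the fixed cost of the framework.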

When frameworks argue over who is smaller, they can really only compete on two points: the fixed cost of the framework or the variable cost of the application. So either a smaller “b” or a smaller “m”.

Ryan Carniato did a nice comparison of different frameworks in his post JavaScript Framework TodoMVC Size Comparison (table replicated below).

For the sake of my argument, the exact numbers aren’t important, but the table does a good job of illustrating how, as the application size increases, different frameworks have different slopes (“m”) and different initial values (“b”).
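To make the linear model concrete, here is a minimal TypeScript sketch. The type, the function names, and all of the numbers are made up for illustration; they are not measurements of any real framework.

```typescript
// Hypothetical model of client bootup cost: y = m * x + b.
interface FrameworkCost {
  name: string;
  fixedCost: number;   // "b": cost of the framework itself
  costPerUnit: number; // "m": extra cost per unit of application complexity
}

// Bootup cost for an application of the given complexity ("x").
function bootCost(fw: FrameworkCost, complexity: number): number {
  return fw.costPerUnit * complexity + fw.fixedCost;
}

// Complexity at which two frameworks cost the same,
// or null when their slopes are equal and the lines never cross.
function crossover(a: FrameworkCost, b: FrameworkCost): number | null {
  if (a.costPerUnit === b.costPerUnit) return null;
  return (b.fixedCost - a.fixedCost) / (a.costPerUnit - b.costPerUnit);
}

// Illustrative numbers only.
const smallRuntime: FrameworkCost = { name: "small-runtime", fixedCost: 5, costPerUnit: 3 };
const largeRuntime: FrameworkCost = { name: "large-runtime", fixedCost: 40, costPerUnit: 2 };

console.log(bootCost(smallRuntime, 10));            // 35
console.log(bootCost(largeRuntime, 10));            // 60
console.log(crossover(smallRuntime, largeRuntime)); // 35
```

With these assumed numbers, the framework with the smaller “b” wins for small applications, but past the crossover point the one with the smaller “m” wins. Either way, the cost keeps growing with the application.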

What we need is a new paradigm. We need a framework with a constant load time no matter the application complexity. At first glance, this may sound impossible. Surely the initial bundle is proportional to the application complexity? But what if we lazy load code rather than loading it eagerly? I covered why existing frameworks need to eagerly download code in my post, Hydration is pure overhead. Here’s what we’re looking for instead:

The current status quo is to download and execute all of the code at once. What’s needed is a way to sip on the JavaScript as the user is interacting with the site. No interaction, no JavaScript; little interaction, little JavaScript. If there’s a lot of interaction, we approach the classical frameworks and download most of the code. But the big deal is that we need to shift our mental model from eagerly downloading code at the beginning to lazily downloading code as the user interacts.

Current frameworks already know how to do this to some extent, as they all know how to download more code on route change. What’s needed is to extend that paradigm to the interaction level.
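Lazy loading at the interaction level could look roughly like the TypeScript sketch below. It is not how any particular framework implements it; `LazyHandlers`, `register`, and `dispatch` are hypothetical names. Each interaction is registered with a loader, and the loader runs only the first time that interaction actually happens.

```typescript
// The code that handles one interaction.
type Handler = (payload: string) => string;

// A loader fetches that code on demand. In a browser it would typically
// wrap a dynamic import() of a separately bundled chunk.
type Loader = () => Promise<Handler>;

class LazyHandlers {
  private loaders = new Map<string, Loader>();
  private loaded = new Map<string, Promise<Handler>>();

  // Registering is cheap: no handler code is downloaded here.
  register(interaction: string, loader: Loader): void {
    this.loaders.set(interaction, loader);
  }

  // Number of handlers whose code has been requested so far.
  get loadedCount(): number {
    return this.loaded.size;
  }

  // Called when the user interacts. The first call triggers the load;
  // later calls reuse the cached promise.
  async dispatch(interaction: string, payload: string): Promise<string> {
    let pending = this.loaded.get(interaction);
    if (!pending) {
      const loader = this.loaders.get(interaction);
      if (!loader) {
        throw new Error(`No handler registered for "${interaction}"`);
      }
      pending = loader();
      this.loaded.set(interaction, pending);
    }
    const handler = await pending;
    return handler(payload);
  }
}
```

If the user never triggers an interaction, its loader never runs, so the startup cost stays the same however many handlers are registered. A production version would also need to handle failed loads (this sketch caches a rejected promise) and would probably prefetch likely handlers while the browser is idle.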

In the above image, the site is delivered as HTML to the client. Notice that none of the components have their corresponding code loaded. The next image shows which components need to be downloaded and executed on user interaction. The result is that a lot less code needs to be downloaded, and the code that is downloaded is executed later. This reduces the amount of work the CPU has to do on site startup.

Summary

The amount of JavaScript we download to the browser is going up every year. The problem is that our sites' complexity increases as we deliver more complex end-user experiences that require more code. We have already reached a point where we have too much code in the initial download, and our sites' startup performance suffers. This is because the frameworks we use are O(n): the startup cost grows in proportion to the size of the application. This is not scalable.

While different frameworks have different slopes, in the end any slope is too much. Instead, we need to lazy load on interaction rather than on initial load. Frameworks already know how to lazy load on route change; we just need to go deeper and do it on interaction as well. This is needed so that our initial load size can be O(1) and we can lazy load code on an as-needed basis. It’s the only way we can continue to build even more complex web applications in the future.
