AI agents are brilliant engines spinning in neutral.

They can write complex Python, but they can't run it on your machine. They can discuss UI patterns, but they can't see your Figma files.

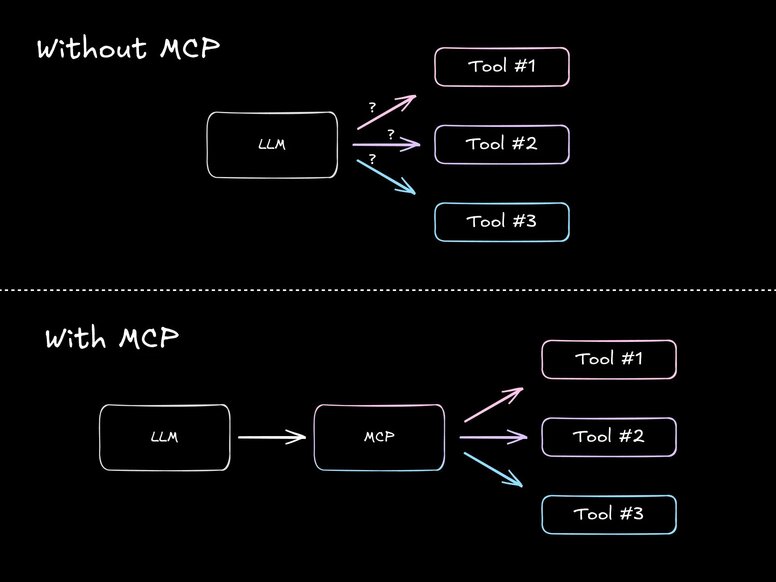

The Model Context Protocol (MCP) solves this disconnect, replacing brittle API integrations with a universal standard for plugging tools into LLMs.

Now, the challenge is choosing the right servers to power it. Below, I've curated a complete list of my personal favorites as a full-stack web developer.

If you haven't read our technical deep dive, here’s the tl;dr: MCP is an open standard that decouples the intelligence (the LLM) from the capabilities (the tools).

Before MCP, connecting an AI to a Postgres database required custom API wrappers. You had to manually define schemas, handle authentication, and update your code every time the model provider tweaked their tool-calling syntax. It was a maintenance nightmare that locked you into a single provider.

MCP introduces a universal interface. You spin up a "Postgres MCP Server" once. That server can now talk to Claude Code, Cursor, Fusion, or any other compliant client.
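Under the hood, that universal interface is JSON-RPC 2.0. A client discovers a server's tools with `tools/list` and invokes one with a `tools/call` request. Here's a minimal TypeScript sketch of the request envelope. The tool name `query` and its `sql` argument are hypothetical placeholders, not the API of any specific Postgres server.

```typescript
// Minimal sketch of the JSON-RPC 2.0 message an MCP client sends to invoke a tool.
// The tool name "query" and its "sql" argument are hypothetical placeholders.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>,
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// The same envelope works against any compliant server, whichever client sends it.
const request = buildToolCall(1, "query", { sql: "SELECT count(*) FROM users" });
const wire = JSON.stringify(request);
```

Because the envelope is identical for every server, switching clients doesn't change what the server has to implement.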

This standardization has shifted the ecosystem from experimental scripts to robust infrastructure. Most of the servers listed below are production-ready tools that give your agents structured access to the real world.

Connecting MCP servers to your files, databases, or Stripe account is closer to wiring up a microservice than adding a new npm package.

- Start with read‑only servers (docs, search, observability).

- Scope each server to a narrow blast radius (per‑project keys, limited directories, dev/test data).

- Log who called what so you can see how agents are actually using your tools.
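The last point is easy to bolt on. Here's a minimal sketch of an audit wrapper that records which client called which tool, with which arguments, and whether the call succeeded. `withAudit`, `auditLog`, and the client and tool names are hypothetical; in a real setup you'd send these entries to your existing logging stack instead of an in-memory array.

```typescript
// Hypothetical audit wrapper: records which client called which tool, with which
// arguments, and whether the call succeeded.
type ToolHandler = (args: Record<string, unknown>) => Promise<string>;

interface AuditEntry {
  timestamp: string;
  client: string;
  tool: string;
  args: Record<string, unknown>;
  ok: boolean;
}

// In-memory for the sketch; ship these to your real logging pipeline instead.
const auditLog: AuditEntry[] = [];

function withAudit(client: string, tool: string, handler: ToolHandler): ToolHandler {
  return async (args) => {
    const entry: AuditEntry = { timestamp: new Date().toISOString(), client, tool, args, ok: false };
    try {
      const result = await handler(args);
      entry.ok = true;
      return result;
    } finally {
      // Runs on success and on failure, so failed calls are logged too.
      auditLog.push(entry);
    }
  };
}
```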

At the end of the post, we’ll dive deeper into best practices for running MCP servers safely in production.

Before diving into the specific tools, you should know where the ecosystem lives. These are the best places to discover new and updated servers:

- Official repository (GitHub): The source of truth for reference implementations.

- Awesome MCP servers: A curated community list.

- Glama.ai: A comprehensive marketplace with visual previews.

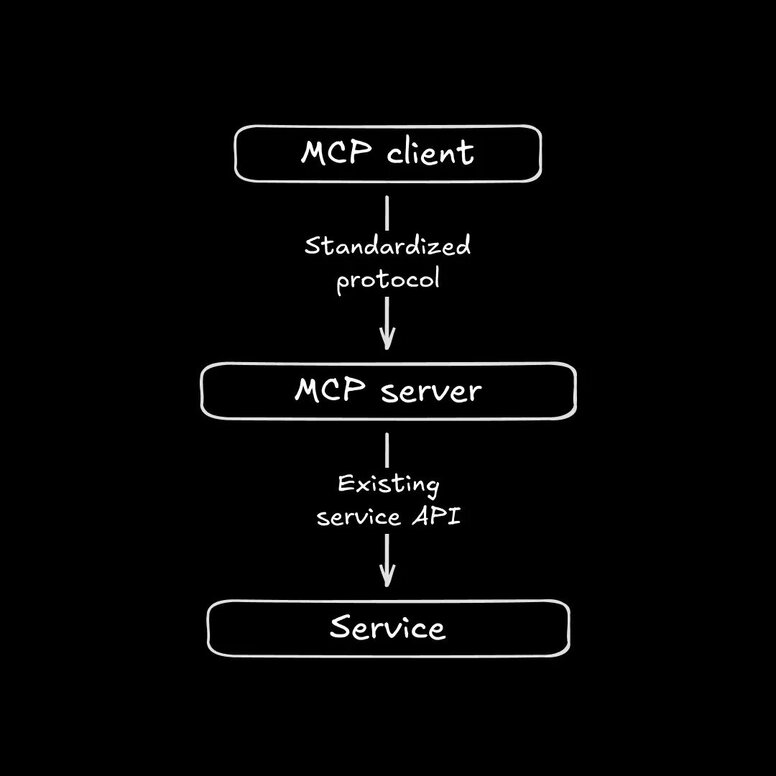

When you evaluate vendor-specific tools, prefer the official implementations. An MCP server is basically just a way for an AI to access a service's existing APIs, so the vendor's own server is the one most likely to keep up with those APIs.

As such, expect MCP servers from official providers to require subscriptions or incur regular API usage costs.

Now, let’s dive into the tools. We’ve divided them up into some developer-friendly categories: Learn, Create, Build, Data, Test, Deploy, Run, Work, Automate, Brain, and Fun.

These MCP servers give your agent real-time documentation and external knowledge. Start here if you want your agent grounded in current docs and web content before it ever touches your codebase.

Context7 offers the most helpful LLM grounding for developers. Instead of relying on training data, the Context7 MCP fetches current docs through a documentation-as-context pipeline.

Ask your agent, "How do I use the latest feature of X library?" and watch it fetch the current docs instead of relying on outdated training data. Or, have it ground answers in the docs for the exact version you’re running.

LLMs have a knowledge cutoff, but your agent often needs to know what happened today. Routing search through an MCP server also gives you consistent behavior across different agents and tools, unlike each client's built-in browsing.

- Brave Search MCP: Best for general queries and finding URLs.

- Fetch MCP: The "Good Ol' Server Fetch" option. Use it when you want raw HTML or Markdown, so the agent can parse a page itself—just avoid internal URLs you wouldn’t normally expose.

- Firecrawl MCP / Jina Reader MCP: Best for turning URLs into clean Markdown. They strip boilerplate, nav, and ads so the agent can focus on the actual article, though very interactive apps or paywalled content may still require a manual check.

- Perplexity MCP / Exa MCP: Best for semantic search and finding similar content. Reach for them when you care more about “find the 5 most relevant sources on this topic” than exhaustive web search, and remember they’re paid APIs so you may want to reserve them for deeper research.
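On the Fetch MCP caveat about internal URLs: if you run a fetch-style server yourself, a cheap first line of defense is refusing obviously internal hosts. This is a naive sketch that only inspects the literal hostname; `isInternalHost` is a hypothetical helper, and it won't catch a public domain that resolves to a private IP.

```typescript
// Naive sketch: refuse fetch targets whose literal hostname is loopback, link-local,
// or in a private IPv4 range. It does not resolve DNS, so a public name that points
// at a private address would still get through.
function isInternalHost(hostname: string): boolean {
  // Lowercase and strip the brackets used around IPv6 literals in URLs.
  const host = hostname.toLowerCase().replace(/^\[|\]$/g, "");
  if (host === "localhost" || host.endsWith(".localhost") || host === "::1") return true;

  const m = host.match(/^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/);
  if (!m) return false;
  const a = Number(m[1]);
  const b = Number(m[2]);
  return (
    a === 10 || // 10.0.0.0/8
    a === 127 || // loopback
    a === 0 || // "this" network
    (a === 169 && b === 254) || // link-local, including cloud metadata endpoints
    (a === 172 && b >= 16 && b <= 31) || // 172.16.0.0/12
    (a === 192 && b === 168) // 192.168.0.0/16
  );
}
```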

GPT Researcher is a deep-research agent that plans, executes, and writes citation-backed reports across both web and local documents.

Its dedicated GPT Researcher MCP server exposes a "do deep research" tool over MCP, giving web developers rigorous answers to questions like "Should we migrate from REST to tRPC?" or "How do the top open-source web analytics stacks compare?" without hand-wiring dozens of search and scraping tools.

Kick off a job, wait a few minutes, and you’ll get a structured report with clickable sources instead of just trusting the model.

These MCP servers power visual interfaces and user experience.

Design-to-code is the holy grail of frontend development. Figma’s official Dev Mode MCP server exposes the live structure of the layer you have selected in Figma—hierarchy, auto‑layout, variants, text styles, and token references—over MCP so tools like Claude, Cursor, or Windsurf can generate code against the real design instead of screenshots.

For a full walkthrough, see our guide, Design to Code with the Figma MCP Server.

If Figma MCP helps your agent respect the design system, the Magic UI MCP helps it move fast within one.

It exposes the Magic UI React + Tailwind component library over MCP, so you can say things like "make a marquee of logos" or "add a blur fade text animation" and get back production-ready components with the right JSX and classes.

It's ideal for frontend devs who want to ship polished marketing sections, animated backgrounds, and device mockups without spending hours tweaking Tailwind utilities by hand.

If you'd rather have an all-in-one Create, Build, Test, and Deploy stack wired up for you, take a look at Builder Fusion.

Instead of juggling individual MCP configs, you get an AI-powered visual canvas that sits on top of your existing GitHub repo, design system, and APIs, so your whole team can prompt, tweak UI, and ship real pull requests against the actual codebase.

Because Fusion speaks MCP natively, you connect servers like Figma, Supabase, Netlify, Sentry, or Linear once at the workspace level and every agent can use them. You don't need to hand-edit JSON configs every time you spin up a new environment.

These MCP servers help you write, debug, and secure code.

These servers give your agent access to your local machine. Without them, the agent is trapped in the browser.

The official filesystem MCP is the secure default. It strictly limits access to allowed directories, making it best for safe code reading and basic edits.

A common use case is asking an agent to "read this repo and explain the architecture." Use it when you're drowning in copy/paste and just need the agent to see the code.
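What "strictly limits access to allowed directories" means in practice is a containment check before every read or write. The sketch below uses hypothetical helpers, not the official server's code. It assumes absolute POSIX paths and ignores symlinks, which a real implementation must resolve before comparing.

```typescript
// Collapse ".", "..", and duplicate slashes in an absolute POSIX path.
function normalizePosix(path: string): string {
  const parts: string[] = [];
  for (const segment of path.split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") parts.pop();
    else parts.push(segment);
  }
  return "/" + parts.join("/");
}

// True if the requested path is one of the allowed directories or sits inside one.
function isAllowed(requested: string, allowedDirs: string[]): boolean {
  const target = normalizePosix(requested);
  return allowedDirs.some((dir) => {
    const root = normalizePosix(dir);
    // Compare on a directory boundary so "/work/app" does not admit "/work/app-secrets".
    return target === root || target.startsWith(root === "/" ? "/" : root + "/");
  });
}
```

Comparing against `root + "/"` rather than a bare string prefix is the important detail: it keeps `/work/app-secrets` from slipping past an allowance for `/work/app`.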

Desktop Commander is the "God Mode" alternative to the official filesystem MCP that adds full terminal access, process management (run servers, kill ports), and advanced ripgrep search.

Reach for it when you’re tired of restarting dev servers or copy-pasting failing test logs, but remember it can run arbitrary commands. Treat it like an interactive shell.

Another cool thing you can do with it? "Organize my downloads folder."

Running generated code locally is risky; running it in a sandbox is safe. The E2B MCP server brings "Code Interpreter" capabilities, giving any agent a secure cloud environment to run Python/JS, execute shell commands, or launch browsers.

This allows you to ask the agent to "write a script to analyze this CSV file and generate a chart" or "execute this code to verify it runs without errors."

It’s perfect when you want the agent to run migrations, data-cleanup jobs, or performance experiments without touching your laptop or production data.

These tools give your agent deep knowledge of your codebase and its history.

The Git MCP gives the agent a structured understanding of your local repo (branches, commits, diffs) without parsing raw shell output.

It fixes the usual “agent misunderstood my git status” problem and lets it safely script workflows like creating feature branches, reviewing diffs, and preparing commits without fragile shell parsing.
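To see what "structured" buys you, here's a sketch that turns `git status --porcelain` (v1) output into records an agent can reason about directly. It illustrates the idea and is not the Git MCP's actual implementation; it skips quoted paths and the NUL-separated `-z` format.

```typescript
// One entry per line of `git status --porcelain` (v1) output.
interface FileStatus {
  index: string; // X column: staged state
  worktree: string; // Y column: unstaged state
  path: string;
  origPath?: string; // set for renames/copies ("old -> new")
}

function parsePorcelain(output: string): FileStatus[] {
  return output
    .split("\n")
    .filter((line) => line.length >= 4) // "XY " plus at least one path character
    .map((line): FileStatus => {
      const index = line.charAt(0);
      const worktree = line.charAt(1);
      const rest = line.slice(3);
      const arrow = rest.indexOf(" -> ");
      return arrow === -1
        ? { index, worktree, path: rest }
        : { index, worktree, path: rest.slice(arrow + 4), origPath: rest.slice(0, arrow) };
    });
}
```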

No agent is useful without reading the actual codebase. The GitHub MCP adds a "search code" capability that outperforms simple context dumping.

It shines when you’re constantly pasting GitHub links into chat. Have the agent search a monorepo for call sites, skim PRs, or summarize recent changes based on live repo data instead of whatever happened to be in its training set.

Critical for teams self-hosting or using GitLab's integrated DevOps platform, the GitLab MCP provides deep integration with GitLab's CI/CD pipelines and issue tracking, allowing agents to check build statuses or read merge requests.

It’s great for questions like "why did this MR’s pipeline fail?" or "what actually went out in the last deploy?"

Improve security coverage by letting the AI check your code as it writes it. The Semgrep MCP uses static analysis to find vulnerabilities and bugs and to enforce coding standards.

Have the agent run Semgrep on its own diffs or PRs, then tune the rule set before you gate CI on it.
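Once the rules are tuned, the gate itself can be small. This sketch reads the JSON that `semgrep --json` emits and fails only on findings at blocking severities. It models just the fields it uses (`results`, `check_id`, `path`, `start.line`, `extra.severity`), an assumption worth verifying against your Semgrep version; `gate` is a hypothetical function name.

```typescript
// Only the fields this gate reads are modeled; check them against your Semgrep version.
interface SemgrepFinding {
  check_id: string;
  path: string;
  start: { line: number };
  extra: { severity: string; message: string };
}

interface SemgrepReport {
  results: SemgrepFinding[];
}

// Fail the build only on findings whose severity is in the blocking list.
function gate(
  report: SemgrepReport,
  blocking: string[] = ["ERROR"],
): { pass: boolean; blockers: string[] } {
  const blockers = report.results
    .filter((r) => blocking.includes(r.extra.severity))
    .map((r) => `${r.path}:${r.start.line} ${r.check_id}`);
  return { pass: blockers.length === 0, blockers };
}
```

Start with only `ERROR` blocking while you tune, then widen the list once the noise is under control.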

These MCP servers handle storing, querying, and managing your core business data.

Agents need long-term memory and business data access. Just make sure you scope them to read-only until you really know what you're doing with LLMs + databases.
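If you build or wrap a SQL tool yourself, here's a naive sketch of a read-only guard. Treat it as defense in depth, with a loud caveat: keyword checks are easy to get wrong, so the real control should be a database role that only has `SELECT` privileges. `isReadOnlySql` is a hypothetical helper.

```typescript
// Naive read-only guard for an agent-facing SQL tool (hypothetical helper).
// Keyword checks are easy to get wrong; enforce read-only access with a database
// role that only has SELECT privileges and treat this as an extra layer.
const READ_ONLY_START = /^(select|with|explain|show)\b/i;
const WRITE_KEYWORDS =
  /\b(insert|update|delete|merge|upsert|drop|alter|create|truncate|grant|revoke|copy|into)\b/i;

function isReadOnlySql(sql: string): boolean {
  // Allow one trailing semicolon, but reject anything that looks like multiple statements.
  const statement = sql.trim().replace(/;\s*$/, "");
  if (statement.includes(";")) return false;
  return READ_ONLY_START.test(statement) && !WRITE_KEYWORDS.test(statement);
}
```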

The ecosystem now covers most major databases:

- Prisma Postgres: Best for TypeScript teams. Built directly into the Prisma CLI (`npx prisma mcp`), it allows agents to query data and manage schema migrations.

- Supabase / PostgreSQL: Best for production relational data, fully aware of Row Level Security policies. Use it when the agent needs to see “real” app data without bypassing your auth model. (The Supabase MCP also handles auth.)

- Convex: Best for full-stack feature implementation. It exposes backend functions and tables directly to the agent so you can say “add a notifications feed” and have it wire up both Convex functions and UI.

- MongoDB: Best for document data, offering schema inspection and JSON querying. It helps agents navigate messy, semi-structured collections when you’re debugging odd edge cases or backfilling new fields.

- SQLite: The reliable choice for local-first development. Let the agent experiment with schemas, prototype analytics, or build small internal tools here instead of pointing it at production.

- MindsDB MCP: Best when your app needs to reason over data spread across multiple databases, warehouses, and SaaS tools. It runs a federated query engine behind an MCP server, letting agents treat those sources as a single virtual database.

- AWS MCP Suite: Official servers for DynamoDB, Aurora, and Neptune. Ideal if your infra already lives on AWS and you want the agent to inspect tables, tweak configs, or run targeted queries without juggling bespoke SDK code.

The Stripe MCP gives agents "wallets" and the ability to manage revenue. It provides safe interaction with the Stripe API for customer management, payments, and subscriptions, allowing you to "check the subscription status of user X" or "create a payment link for this new product."

Location is a fundamental primitive of many web apps, from logistics to real estate. The Google Maps MCP gives agents "spatial awareness" and access to real-world point-of-interest data.

Use it to "calculate the delivery radius for this store" or "find top-rated restaurants near the user's coordinates."
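A delivery-radius question boils down to distance math. Here's a sketch using the haversine formula for straight-line (great-circle) distance, assuming a spherical Earth with a mean radius of 6,371 km. This isn't the Google Maps API, which can return road distances; the function names are hypothetical.

```typescript
// Great-circle distance via the haversine formula (spherical Earth, mean radius 6371 km).
// Straight-line only: road distance from a routing API will usually be longer.
const EARTH_RADIUS_KM = 6371;

interface LatLng {
  lat: number;
  lng: number;
}

function toRad(deg: number): number {
  return (deg * Math.PI) / 180;
}

function haversineKm(a: LatLng, b: LatLng): number {
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

function withinRadius(store: LatLng, customer: LatLng, radiusKm: number): boolean {
  return haversineKm(store, customer) <= radiusKm;
}
```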

These MCP servers help you validate code, debug runtime issues, and automate browser workflows.

Agents need to verify their work by actually running the app in a browser. The Playwright MCP allows agents to interact with web pages using structured accessibility trees instead of relying on slow, expensive screenshots.

You can ask it to "go to localhost:3000, log in as 'testuser', and verify the dashboard loads." It’s great for catching “looks fine in the DOM, but the UI is broken” issues.

"It works on my machine" isn't enough. Agents need to inspect the runtime environment. The Chrome DevTools MCP provides agents with direct access to the Console, Network tab, and Performance Profiler.

This allows you to "check why the LCP (Largest Contentful Paint) is slow on the homepage" or "capture the console errors when I click this button."

Your agent might build for Chrome, but your users are on Safari, Firefox, and mobile. The BrowserStack MCP lets the agent "rent" thousands of real devices in the cloud to verify its code.

A key feature is its ability to automatically scale test suites across different environments without any local setup. Use it when you need to confirm that a layout or interaction really works on a specific device/OS combo.

Just watch concurrency limits and test run time so you don’t burn through your BrowserStack minutes.

These MCP servers focus on shipping your app and keeping it healthy in the wild.

Agents don't stop at writing code. With these MCPs, they can ship it by taking your local project and deploying it to the world.

Best for frontend/JAMstack projects, the Netlify MCP allows agents to manage sites, build hooks, and environment variables.

Use it when you’re sick of bouncing between the Netlify UI and CLI.

Let the agent inspect failing build logs, tweak environment variables, or spin up preview deploys while you stay in the editor.

For Next.js and full-stack apps, the Vercel MCP handles deployment monitoring, project management, and infrastructure control.

Have the agent create new projects, adjust environment variables, or check production vs preview deployment health.

You need to give AI access to runtime data, not just static code. A common use case is when an agent sees a stack trace, pulls the live error from Sentry or Datadog, and proposes a fix.

The Sentry MCP is built for tracking real-time errors and performance issues directly in the editor.

Instead of screenshotting stack traces into chat, point the agent at a Sentry issue so it can pull the full context, correlate it with recent releases, and suggest fixes without you ever opening the Sentry UI.

For full-stack observability, the Datadog MCP allows agents to query metrics, logs, and traces to diagnose system-wide issues.

It’s ideal for “it’s slow in production” moments where you want the agent to slice through logs, latency graphs, and traces across services.

The Last9 MCP provides deep reliability engineering and service graphs.

A powerful use case is asking the agent to "check for performance regressions after the last deploy." The agent can correlate change events with live metrics, traces, and logs to identify if your recent code push caused a latency spike or error rate increase.
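Conceptually, that correlation is a before/after comparison around the deploy timestamp. Here's a minimal sketch comparing nearest-rank p95 latency in equal windows on either side of a deploy. The `Sample` shape, window size, and 20% threshold are assumptions for illustration; an observability MCP would supply the real metrics.

```typescript
// Hypothetical metric sample: a timestamp (ms) and a request latency (ms).
interface Sample {
  ts: number;
  latencyMs: number;
}

// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) return NaN;
  const sorted = values.slice().sort((x, y) => x - y);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1] ?? NaN;
}

// Flag a regression if p95 after the deploy exceeds p95 before it by more than maxIncrease.
function latencyRegressed(
  samples: Sample[],
  deployTs: number,
  windowMs: number,
  maxIncrease = 0.2,
): boolean {
  const before = samples
    .filter((s) => s.ts >= deployTs - windowMs && s.ts < deployTs)
    .map((s) => s.latencyMs);
  const after = samples
    .filter((s) => s.ts >= deployTs && s.ts < deployTs + windowMs)
    .map((s) => s.latencyMs);
  if (before.length === 0 || after.length === 0) return false; // not enough data to judge
  return percentile(after, 95) > percentile(before, 95) * (1 + maxIncrease);
}
```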

These MCP servers support communication, project management, and business workflows.

The Slack MCP allows agents to read channels, summarize threads, and post messages. It turns your chat history into an accessible knowledge base, letting you simply ask the agent to "catch me up on the #engineering-standup channel."

It’s perfect when you’re drowning in unread channels and meeting threads.

- Linear MCP: Ideal for high-velocity startups. It focuses on issues, cycles, and project updates.

- Jira MCP: Built for enterprise scale. It handles complex workflows and ticket states.

You can use either server to "create a ticket for this bug I just found" or ask "what's the status of the 'Login Refactor' project?"

Most company knowledge lives in wikis, not code comments.

- Notion MCP: Provides semantic search over your Notion workspace.

- Google Drive MCP: Accesses Docs, Sheets, and Slides.

This allows you to say "Find the product specs for the Q3 launch in Drive" and then ask the agent to help you scaffold the code based on those specs.

If you live in Obsidian instead of Notion, a handful of community Obsidian MCP servers (like obsidian-claude-code-mcp and obsidian-mcp-plugin) let agents read, search, and refactor your local vault.

They're great if your architecture notes, snippets, and design docs already live in Markdown, but the ecosystem is still early and fragmented, so treat Obsidian MCP as a power-user add-on rather than part of the core web-dev stack.

Task Master turns Product Requirements Documents (PRDs) into structured, prioritized, implementation-ready task lists your agent can read, create, and update as it codes (e.g., "implement task 5, then mark it complete") without custom API glue.

Use it when your backlog lives in Markdown and sticky notes instead of Jira or Linear; keep one source of truth for tasks (.taskmaster/ in the repo) so agents and humans don’t drift out of sync.
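The "implement task 5, then mark it complete" loop depends on picking the next unblocked task. Here's a sketch over a simplified, hypothetical task record (id, status, priority, dependencies). Task Master's real file format has more fields, and its selection logic may differ.

```typescript
// Hypothetical, simplified task record; Task Master's real tasks file has more fields.
type Status = "pending" | "in-progress" | "done";
type Priority = "high" | "medium" | "low";

interface Task {
  id: number;
  title: string;
  status: Status;
  priority: Priority;
  dependencies: number[];
}

const PRIORITY_ORDER: Record<Priority, number> = { high: 0, medium: 1, low: 2 };

// Next pending task whose dependencies are all done: highest priority first, then lowest id.
function nextTask(tasks: Task[]): Task | undefined {
  const done = new Set(tasks.filter((t) => t.status === "done").map((t) => t.id));
  return tasks
    .filter((t) => t.status === "pending" && t.dependencies.every((d) => done.has(d)))
    .sort((a, b) => PRIORITY_ORDER[a.priority] - PRIORITY_ORDER[b.priority] || a.id - b.id)[0];
}

// Returns a new list with the task marked done; the input array is not mutated.
function markDone(tasks: Task[], id: number): Task[] {
  return tasks.map((t): Task => (t.id === id ? { ...t, status: "done" } : t));
}
```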

These MCP servers connect your tools and automate repetitive tasks.

The n8n MCP brings complex, low-code workflow automation to the agent. n8n now natively supports MCP, allowing agents to trigger multi-step workflows.

For example, you can ask the agent to "run the 'Qualify & Enrich' workflow" whenever a new lead comes in and return the result.

It’s best when you already have n8n flows gluing together CRMs, email, and internal APIs. Have the agent orchestrate those instead of hand-writing HTTP calls.

- Zapier MCP: Connects your agent to 5,000+ apps without needing custom API integrations.

- Pipedream MCP: A developer-centric option that allows agents to trigger serverless code (Node.js/Python) and event-driven workflows.

Reach for these when you want your agent to actually “do things” across SaaS, such as sending emails, updating CRM records, or syncing analytics—without writing all the glue code yourself.

These MCP servers enhance the agent's own memory, reasoning, and metacognition.

Most MCP servers give your agent new senses (files, Git, databases), whereas Sequential Thinking gives it a better mindset. The Sequential Thinking MCP externalizes reasoning as explicit steps and branches instead of a single opaque "answer."

Use it to break down big changes, like "migrate our auth flow to a new provider," into ordered phases (analyze, design, implement, test, roll out), explore alternative approaches, and revisit those plans later.

It doesn't talk to external APIs by itself; it shines as an optional "advanced mode" alongside Task Master, Git, or your observability MCPs when you want your AI pair programmer to think more like a senior engineer.

Most agents forget everything the moment you close the chat. Memory MCPs solve this by giving your agent a persistent brain.

Instead of just retrieving text matches, these tools build structured understanding, allowing the agent to remember relationships between people, code, and concepts over time.

- Knowledge Graph Memory: Builds a dynamic graph of entities (People, Projects, Concepts) and their relationships.

- Cognee MCP: Graph-RAG for your agent. It ingests your documents and creates an interconnected knowledge graph, allowing the agent to find "hidden" connections between concepts (e.g., "How does the new billing policy affect the legacy API?").

- Vector Databases (Chroma, Weaviate, Milvus): RAG-as-a-Tool. This lets you "search" the vector DB for exactly the relevant paragraph instead of stuffing entire documents into the context window.
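To make the knowledge-graph idea concrete, here's a minimal in-memory sketch in which entities carry observations and relations are typed edges between them. It's loosely modeled on entity/relation memory servers rather than any server's actual storage; the class, method, and entity names are hypothetical.

```typescript
// Hypothetical in-memory knowledge graph: entities with observations, typed relations.
interface Entity {
  name: string;
  entityType: string;
  observations: string[];
}

interface Relation {
  from: string;
  to: string;
  relationType: string;
}

class KnowledgeGraph {
  private entities = new Map<string, Entity>();
  private relations: Relation[] = [];

  addEntity(name: string, entityType: string, observations: string[] = []): void {
    this.entities.set(name, { name, entityType, observations });
  }

  // Only connect entities the graph already knows about.
  relate(from: string, relationType: string, to: string): void {
    if (!this.entities.has(from) || !this.entities.has(to)) throw new Error("unknown entity");
    this.relations.push({ from, to, relationType });
  }

  // Answer "who <relationType> <to>?" by following incoming edges.
  whoRelatesTo(relationType: string, to: string): Entity[] {
    return this.relations
      .filter((r) => r.relationType === relationType && r.to === to)
      .map((r) => this.entities.get(r.from))
      .filter((e): e is Entity => e !== undefined);
  }
}
```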

Use graph-style memory when you care about relationships and long-lived knowledge (“who owns billing?”); use vector DB MCPs when you just need fast, relevant snippets from big document piles.
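The vector side works differently: rank stored chunks by similarity to the query's embedding and return only the best few. Here's a brute-force cosine-similarity sketch. Real vector databases use approximate nearest-neighbor indexes (such as HNSW) and model-generated embeddings; the toy 3-dimensional vectors in the usage check are made up.

```typescript
// A stored text chunk and its (toy) embedding vector.
interface Chunk {
  id: string;
  text: string;
  embedding: number[];
}

// Cosine similarity; returns 0 if either vector has zero length.
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    na += x * x;
    nb += y * y;
  }
  return na === 0 || nb === 0 ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Brute-force retrieval: score every chunk, keep the k most similar.
function topK(query: number[], chunks: Chunk[], k: number): Chunk[] {
  return chunks
    .map((c) => ({ c, score: cosine(query, c.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((s) => s.c);
}
```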

Finally, don’t forget to keep your brain happy with some generative playlisting:

- Spotify MCP turns your agent into a focused jukebox that can search, queue, and switch playlists directly from your editor.

- If you live in the YouTube ecosystem, YouTube Music MCP or LLM Jukebox do the same for YouTube/YouTube Music, handling track search and playback on your behalf.

"Installing" an MCP server just means telling your client where that server binary or URL lives:

- Cursor (fastest starting point if you’re already coding there): Open Settings → Features → MCP, click Add MCP server, and point Cursor at the command (for `stdio` servers) or URL (for SSE/HTTP servers). For power users, the same information can live in `~/.cursor/mcp.json` under an `mcpServers` block.

- Claude Desktop / Claude Code: For Claude Desktop, create or edit `claude_desktop_config.json` (for example, on macOS: `~/Library/Application Support/Claude/claude_desktop_config.json`) and add entries under `mcpServers` that specify the server command, args, and any env vars or API keys, then restart Claude so the tools show up. Claude Code keeps its own server list, which you manage with `claude mcp add`.

- ChatGPT (OpenAI, best when you’re mostly in the browser): In ChatGPT, enable Developer mode, go to Settings → Connectors, and create a connector that points at your MCP server's HTTPS endpoint (or a tunneled local URL via something like `ngrok`). Once saved, you can select that connector in a chat and ChatGPT will call your MCP tools as needed.
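For reference, both Cursor's `mcp.json` and Claude Desktop's `claude_desktop_config.json` use an `mcpServers` object keyed by server name, with a `command`, `args`, and optional `env` for each `stdio` server. To keep this post's examples in one language, the sketch builds that JSON from a typed TypeScript object; writing the JSON by hand works just as well. It registers the reference filesystem server (`@modelcontextprotocol/server-filesystem`) with a single allowed directory; the path is a placeholder.

```typescript
// Shape of a stdio server entry in an `mcpServers` config block.
interface StdioServer {
  command: string;
  args: string[];
  env?: Record<string, string>; // per-server environment variables, e.g. API keys
}

interface McpConfig {
  mcpServers: Record<string, StdioServer>;
}

function buildConfig(projectDir: string): McpConfig {
  return {
    mcpServers: {
      // Reference filesystem server, limited to a single project directory.
      filesystem: {
        command: "npx",
        args: ["-y", "@modelcontextprotocol/server-filesystem", projectDir],
      },
    },
  };
}

// Placeholder path; point it at the one directory the agent should see.
const configJson = JSON.stringify(buildConfig("/Users/you/projects/my-app"), null, 2);
```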

From there, you generally have two paths to adoption:

Manual config: Wire these servers into your editor or chat client one by one using the steps above. It’s flexible and works everywhere, but it often involves managing JSON files, installing Python or Node.js runtimes, and handling API keys manually.

The easy way (integrated environments): Tools like Builder Fusion are moving toward "click-to-connect" MCP tools and curated stacks, where you connect Git, Figma, Supabase, and friends once and every agent in that workspace can use them without extra setup.

Once you’ve picked a few servers from this list, lock in some guardrails so your agents don’t accidentally nuke prod. At a practical level:

- Treat each MCP server like a microservice with its own blast radius. Put it behind TLS, require auth between client and server, and scope API keys so a compromised config file can’t see everything.

- Grant the minimum privileges needed. High‑impact servers (filesystem, terminal, databases, Stripe) should start in read‑only or sandboxed modes until you’ve observed real usage patterns.

- Handle secrets and isolation like any other production system. Use a secrets manager instead of hard‑coding keys in MCP JSON, run risky servers in containers or remote sandboxes, and log who called which server with which arguments.

- Start read‑heavy, then add writes with a rollback plan. Begin with documentation, search, and observability servers. Add write access only when there’s a clear business case and a way to undo mistakes.
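For the "minimum privileges" point, here's a sketch of client- or gateway-side filtering. The MCP spec lets a tool definition carry optional `annotations` such as `readOnlyHint` and `destructiveHint`, but those are hints reported by the server itself, so this sketch exposes only tools marked read-only plus anything on an explicit allowlist. `exposeTools` and the tool names are hypothetical.

```typescript
// Subset of MCP tool metadata used here; annotations are self-reported hints.
interface ToolAnnotations {
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
}

interface ToolDefinition {
  name: string;
  description?: string;
  annotations?: ToolAnnotations;
}

// Expose read-only tools by default; write tools only when explicitly allowlisted.
function exposeTools(tools: ToolDefinition[], allowWrites: Set<string>): ToolDefinition[] {
  return tools.filter(
    (t) => t.annotations?.readOnlyHint === true || allowWrites.has(t.name),
  );
}
```

Unannotated tools are treated as writes here, which errs on the side of hiding them.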

For quick local experiments on your own machine, you can just… go wild. But once agents can touch shared data or money, treat MCP like any other piece of production infra.

The MCP ecosystem is moving fast. The "best" server is simply the one that solves your specific AI-related exhaustion.

If you're tired of copy-pasting code, install the GitHub MCP. If you're tired of explaining your database schema, install the Postgres MCP. And so on.

Pick one server from this list and install it today.

While individual MCP servers are powerful, the future is specialized environments like Builder Fusion that bake these connections into a visual editor, so you don't just chat with your tools. You build with them.

Builder.io visually edits code, uses your design system, and sends pull requests.
